mirror of

https://gitcode.com/gh_mirrors/fe/FERPlus.git

synced 2025-12-30 05:22:26 +00:00

Add a sceript that generate training data and update the code to latest CNTK version.

This commit is contained in:

12

README.md

12

README.md

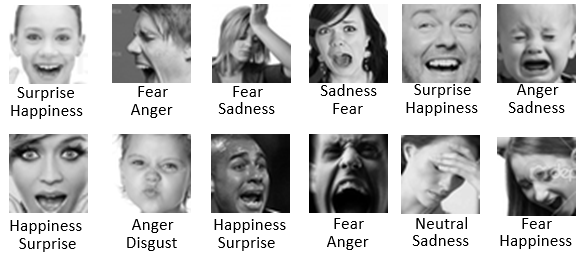

@@ -5,12 +5,18 @@ Here are some examples of the FER vs FER+ labels extracted from the abovemention

|

||||

|

||||

|

||||

|

||||

The new label file is named **_fer2013new.csv_** and contains the same number of rows as the original *fer2013.csv* label file with the same order, so that you infer which emotion tag belongs to which image. Since we can't host the actual image content, please find the original FER data set here: https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge/data

|

||||

|

||||

We also provide a simple parsing code in Python to show how to parse the new labels and how to convert labels to a probability distribution (there are multiple ways to do it, we show an example). The parsing code is in [src/ReadFERPlus.py](https://github.com/Microsoft/FERPlus/tree/master/src)

|

||||

The new label file is named **_fer2013new.csv_** and contains the same number of rows as the original **_fer2013.csv_** label file with the same order, so that you infer which emotion tag belongs to which image. Since we can't host the actual image content, please find the original FER data set here: https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge/data

|

||||

|

||||

The format of the CSV file is as follows: usage, neutral, happiness, surprise, sadness, anger, disgust, fear, contempt, unknown, NF. Columns "usage" is the same as the original FER label to differentiate between training, public test and private test sets. The other columns are the vote count for each emotion with the addition of unknown and NF (Not a Face).

|

||||

|

||||

|

||||

## Training data

|

||||

We provide a simple script `generate_training_data.py` in python that takes **_fer2013.csv_** and **_fer2013new.csv_** as inputs, merge both CSV files and export all the images into a png files for the trainer to process.

|

||||

|

||||

```

|

||||

python generate_training_data.py -d <dataset base folder> -fer <fer2013.csv path> -ferplus <fer2013new.csv path>

|

||||

```

|

||||

|

||||

## Training

|

||||

We also provide a training code with implementation for all the training modes (majority, probability, cross entropy and multi-label) described in https://arxiv.org/abs/1608.01041. The training code uses MS Cognitive Toolkit (formerly CNTK) available in: https://github.com/Microsoft/CNTK.

|

||||

|

||||

|

||||

@@ -1,130 +0,0 @@

|

||||

#

|

||||

# Copyright (c) Microsoft. All rights reserved.

|

||||

# Licensed under the MIT license. See LICENSE.md file in the project root for full license information.

|

||||

#

|

||||

|

||||

import sys

|

||||

import csv

|

||||

import argparse

|

||||

import numpy as np

|

||||

|

||||

def main(fer_label_file):

|

||||

"""

|

||||

Main entry points, it simply parse the new FER emotion label file and print its summary.

|

||||

|

||||

Parameters:

|

||||

fer_label_file: Path to the CSV label file.

|

||||

"""

|

||||

header, train_data, val_data, test_data = load_labels(fer_label_file)

|

||||

|

||||

# Print the summary using the emotion with max probability (majority voting).

|

||||

emotion_count = len(header)

|

||||

train_image_count_per_emotion = count_image_per_emotion(emotion_count, train_data)

|

||||

validation_image_count_per_emotion = count_image_per_emotion(emotion_count, val_data)

|

||||

test_image_count_per_emotion = count_image_per_emotion(emotion_count, test_data)

|

||||

|

||||

print("{0}\t{1}\t{2}\t{3}".format("".ljust(10), "Train", "Val", "Test"))

|

||||

|

||||

for index in range(emotion_count):

|

||||

print("{0}\t{1}\t{2}\t{3}".format(header[index].ljust(10),

|

||||

train_image_count_per_emotion[index],

|

||||

validation_image_count_per_emotion[index],

|

||||

test_image_count_per_emotion[index]))

|

||||

|

||||

def count_image_per_emotion(emotion_count, data):

|

||||

"""

|

||||

For summary display, a helper function that count the number of

|

||||

image per emotion.

|

||||

|

||||

Parameters:

|

||||

emotion_count: the number of emotions.

|

||||

data: the list of emotion for each image.

|

||||

"""

|

||||

image_count_per_emotion = [0] * emotion_count

|

||||

for emotion_prob in data:

|

||||

image_count_per_emotion[np.argmax(emotion_prob)] += 1

|

||||

|

||||

return image_count_per_emotion

|

||||

|

||||

def load_labels(fer_label_file):

|

||||

"""

|

||||

Load and parse the label CSV file, contains the new FER label.

|

||||

|

||||

Parameters:

|

||||

fer_label_file: Path to the CSV label file.

|

||||

"""

|

||||

train_data = []

|

||||

val_data = []

|

||||

test_data = []

|

||||

|

||||

with open(fer_label_file) as label_file:

|

||||

emotion_label = csv.reader(label_file)

|

||||

emotion_label_itr = iter(emotion_label)

|

||||

|

||||

# First row is the header

|

||||

header = next(emotion_label_itr)

|

||||

header = header[1:len(header)]

|

||||

|

||||

# Split into train, validate and test set.

|

||||

for row in emotion_label_itr:

|

||||

emotion_raw = map(float, row[1:len(row)])

|

||||

if row[0] == "Training":

|

||||

train_data.append(process_data(emotion_raw))

|

||||

elif row[0] == "PublicTest":

|

||||

val_data.append(process_data(emotion_raw))

|

||||

elif row[0] == "PrivateTest":

|

||||

test_data.append(process_data(emotion_raw))

|

||||

else:

|

||||

raise ValueError('Invalid usage')

|

||||

|

||||

return header, train_data, val_data, test_data

|

||||

|

||||

def process_data(emotion_raw):

|

||||

"""

|

||||

Takes the raw votes for each emotion and return the probability distribution.

|

||||

We ignore outliers and distribution that has one vote per emotion.

|

||||

|

||||

Parameters:

|

||||

emotion_raw: Array of vote count per emotion from the label file.

|

||||

"""

|

||||

size = len(emotion_raw)

|

||||

emotion_unknown = [0.0] * size

|

||||

emotion_unknown[-2] = 1.0

|

||||

|

||||

# remove emotions with a single vote (outlier removal)

|

||||

for i in range(size):

|

||||

if emotion_raw[i] < 1.0 + sys.float_info.epsilon:

|

||||

emotion_raw[i] = 0.0

|

||||

|

||||

sum_list = sum(emotion_raw)

|

||||

emotion = [0.0] * size

|

||||

|

||||

sum_part = 0

|

||||

count = 0

|

||||

valid_emotion = True

|

||||

while sum_part < 0.75*sum_list and count < 3 and valid_emotion:

|

||||

maxval = max(emotion_raw)

|

||||

for i in range(size):

|

||||

if emotion_raw[i] == maxval:

|

||||

emotion[i] = maxval

|

||||

emotion_raw[i] = 0

|

||||

sum_part += emotion[i]

|

||||

count += 1

|

||||

if i >= 8: # unknown or non-face share same number of max votes

|

||||

valid_emotion = False

|

||||

if sum(emotion) > maxval: # there have been other emotions ahead of unknown or non-face

|

||||

emotion[i] = 0

|

||||

count -= 1

|

||||

break

|

||||

if sum(emotion) <= 0.5*sum_list or count > 3: # less than 50% of the votes are integrated, or there are too many emotions, we'd better discard this example

|

||||

emotion = emotion_unknown # force setting as unknown

|

||||

|

||||

return [float(i)/sum(emotion) for i in emotion]

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument("-f", "--fer_label_file", type = str, help = "FER 2013 update label file.", required = True)

|

||||

|

||||

args = parser.parse_args()

|

||||

|

||||

main(args.fer_label_file)

|

||||

83

src/generate_training_data.py

Normal file

83

src/generate_training_data.py

Normal file

@@ -0,0 +1,83 @@

|

||||

#

|

||||

# Copyright (c) Microsoft. All rights reserved.

|

||||

# Licensed under the MIT license. See LICENSE.md file in the project root for full license information.

|

||||

#

|

||||

|

||||

import os

|

||||

import csv

|

||||

import argparse

|

||||

import numpy as np

|

||||

from itertools import islice

|

||||

from PIL import Image

|

||||

|

||||

# List of folders for training, validation and test.

|

||||

folder_names = {'Training' : 'FER2013Train',

|

||||

'PublicTest' : 'FER2013Valid',

|

||||

'PrivateTest': 'FER2013Test'}

|

||||

|

||||

def str_to_image(image_blob):

|

||||

''' Convert a string blob to an image object. '''

|

||||

image_string = image_blob.split(' ')

|

||||

image_data = np.asarray(image_string, dtype=np.uint8).reshape(48,48)

|

||||

return Image.fromarray(image_data)

|

||||

|

||||

def main(base_folder, fer_path, ferplus_path):

|

||||

'''

|

||||

Generate PNG image files from the combined fer2013.csv and fer2013new.csv file. The generated files

|

||||

are stored in their corresponding folder for the trainer to use.

|

||||

|

||||

Args:

|

||||

base_folder(str): The base folder that contains 'FER2013Train', 'FER2013Valid' and 'FER2013Test'

|

||||

subfolder.

|

||||

fer_path(str): The full path of fer2013.csv file.

|

||||

ferplus_path(str): The full path of fer2013new.csv file.

|

||||

'''

|

||||

|

||||

print("Start generating ferplus images.")

|

||||

|

||||

for key, value in folder_names.items():

|

||||

folder_path = os.path.join(base_folder, value)

|

||||

if not os.path.exists(folder_path):

|

||||

os.makedirs(folder_path)

|

||||

|

||||

ferplus_entries = []

|

||||

with open(ferplus_path,'r') as csvfile:

|

||||

ferplus_rows = csv.reader(csvfile, delimiter=',')

|

||||

for row in islice(ferplus_rows, 1, None):

|

||||

ferplus_entries.append(row)

|

||||

|

||||

index = 0

|

||||

with open(fer_path,'r') as csvfile:

|

||||

fer_rows = csv.reader(csvfile, delimiter=',')

|

||||

for row in islice(fer_rows, 1, None):

|

||||

ferplus_row = ferplus_entries[index]

|

||||

file_name = ferplus_row[1].strip()

|

||||

if len(file_name) > 0:

|

||||

image = str_to_image(row[1])

|

||||

image_path = os.path.join(base_folder, folder_names[row[2]], file_name)

|

||||

image.save(image_path, compress_level=0)

|

||||

index += 1

|

||||

|

||||

print("Done...")

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument("-d",

|

||||

"--base_folder",

|

||||

type = str,

|

||||

help = "Base folder containing the training, validation and testing folder.",

|

||||

required = True)

|

||||

parser.add_argument("-fer",

|

||||

"--fer_path",

|

||||

type = str,

|

||||

help = "Path to the original fer2013.csv file.",

|

||||

required = True)

|

||||

|

||||

parser.add_argument("-ferplus",

|

||||

"--ferplus_path",

|

||||

type = str,

|

||||

help = "Path to the new fer2013new.csv file.",

|

||||

required = True)

|

||||

|

||||

args = parser.parse_args()

|

||||

main(args.base_folder, args.fer_path, args.ferplus_path)

|

||||

@@ -49,25 +49,25 @@ class VGG13(object):

|

||||

|

||||

def _create_model(self, num_classes):

|

||||

with ct.default_options(activation=ct.relu, init=ct.glorot_uniform()):

|

||||

model = ct.Sequential([

|

||||

ct.For(range(2), lambda i: [

|

||||

ct.Convolution((3,3), [64,128][i], pad=True, name='conv{}-1'.format(i+1)),

|

||||

ct.Convolution((3,3), [64,128][i], pad=True, name='conv{}-2'.format(i+1)),

|

||||

ct.MaxPooling((2,2), strides=(2,2), name='pool{}-1'.format(i+1)),

|

||||

ct.Dropout(0.25, name='drop{}-1'.format(i+1))

|

||||

model = ct.layers.Sequential([

|

||||

ct.layers.For(range(2), lambda i: [

|

||||

ct.layers.Convolution((3,3), [64,128][i], pad=True, name='conv{}-1'.format(i+1)),

|

||||

ct.layers.Convolution((3,3), [64,128][i], pad=True, name='conv{}-2'.format(i+1)),

|

||||

ct.layers.MaxPooling((2,2), strides=(2,2), name='pool{}-1'.format(i+1)),

|

||||

ct.layers.Dropout(0.25, name='drop{}-1'.format(i+1))

|

||||

]),

|

||||

ct.For(range(2), lambda i: [

|

||||

ct.Convolution((3,3), [256,256][i], pad=True, name='conv{}-1'.format(i+3)),

|

||||

ct.Convolution((3,3), [256,256][i], pad=True, name='conv{}-2'.format(i+3)),

|

||||

ct.Convolution((3,3), [256,256][i], pad=True, name='conv{}-3'.format(i+3)),

|

||||

ct.MaxPooling((2,2), strides=(2,2), name='pool{}-1'.format(i+3)),

|

||||

ct.Dropout(0.25, name='drop{}-1'.format(i+3))

|

||||

ct.layers.For(range(2), lambda i: [

|

||||

ct.layers.Convolution((3,3), [256,256][i], pad=True, name='conv{}-1'.format(i+3)),

|

||||

ct.layers.Convolution((3,3), [256,256][i], pad=True, name='conv{}-2'.format(i+3)),

|

||||

ct.layers.Convolution((3,3), [256,256][i], pad=True, name='conv{}-3'.format(i+3)),

|

||||

ct.layers.MaxPooling((2,2), strides=(2,2), name='pool{}-1'.format(i+3)),

|

||||

ct.layers.Dropout(0.25, name='drop{}-1'.format(i+3))

|

||||

]),

|

||||

ct.For(range(2), lambda i: [

|

||||

ct.Dense(1024, activation=None, name='fc{}'.format(i+5)),

|

||||

ct.Activation(activation=ct.relu, name='relu{}'.format(i+5)),

|

||||

ct.Dropout(0.5, name='drop{}'.format(i+5))

|

||||

ct.layers.For(range(2), lambda i: [

|

||||

ct.layers.Dense(1024, activation=None, name='fc{}'.format(i+5)),

|

||||

ct.layers.Activation(activation=ct.relu, name='relu{}'.format(i+5)),

|

||||

ct.layers.Dropout(0.5, name='drop{}'.format(i+5))

|

||||

]),

|

||||

ct.Dense(num_classes, activation=None, name='output')

|

||||

ct.layers.Dense(num_classes, activation=None, name='output')

|

||||

])

|

||||

return model

|

||||

|

||||

17

src/train.py

17

src/train.py

@@ -15,9 +15,6 @@ import logging

|

||||

from models import *

|

||||

from ferplus import *

|

||||

|

||||

from cntk import Trainer

|

||||

from cntk.learner import sgd, momentum_sgd, learning_rate_schedule, UnitType, momentum_as_time_constant_schedule

|

||||

from cntk.ops import cross_entropy_with_softmax, classification_error

|

||||

import cntk as ct

|

||||

|

||||

emotion_table = {'neutral' : 0,

|

||||

@@ -67,8 +64,8 @@ def main(base_folder, training_mode='majority', model_name='VGG13', max_epochs =

|

||||

model = build_model(num_classes, model_name)

|

||||

|

||||

# set the input variables.

|

||||

input_var = ct.input_variable((1, model.input_height, model.input_width), np.float32)

|

||||

label_var = ct.input_variable((num_classes), np.float32)

|

||||

input_var = ct.input((1, model.input_height, model.input_width), np.float32)

|

||||

label_var = ct.input((num_classes), np.float32)

|

||||

|

||||

# read FER+ dataset.

|

||||

logging.info("Loading data...")

|

||||

@@ -92,16 +89,16 @@ def main(base_folder, training_mode='majority', model_name='VGG13', max_epochs =

|

||||

# Training config

|

||||

lr_per_minibatch = [model.learning_rate]*20 + [model.learning_rate / 2.0]*20 + [model.learning_rate / 10.0]

|

||||

mm_time_constant = -minibatch_size/np.log(0.9)

|

||||

lr_schedule = learning_rate_schedule(lr_per_minibatch, unit=UnitType.minibatch, epoch_size=epoch_size)

|

||||

mm_schedule = momentum_as_time_constant_schedule(mm_time_constant)

|

||||

lr_schedule = ct.learning_rate_schedule(lr_per_minibatch, unit=ct.UnitType.minibatch, epoch_size=epoch_size)

|

||||

mm_schedule = ct.momentum_as_time_constant_schedule(mm_time_constant)

|

||||

|

||||

# loss and error cost

|

||||

train_loss = cost_func(training_mode, pred, label_var)

|

||||

pe = classification_error(z, label_var)

|

||||

pe = ct.classification_error(z, label_var)

|

||||

|

||||

# construct the trainer

|

||||

learner = momentum_sgd(z.parameters, lr_schedule, mm_schedule)

|

||||

trainer = Trainer(z, (train_loss, pe), learner)

|

||||

learner = ct.momentum_sgd(z.parameters, lr_schedule, mm_schedule)

|

||||

trainer = ct.Trainer(z, (train_loss, pe), learner)

|

||||

|

||||

# Get minibatches of images to train with and perform model training

|

||||

max_val_accuracy = 0.0

|

||||

|

||||

Reference in New Issue

Block a user