mirror of

https://github.com/deepinsight/insightface.git

synced 2025-12-29 23:52:26 +00:00

a big tree refine

This commit is contained in:

2

.gitignore

vendored

2

.gitignore

vendored

@@ -99,3 +99,5 @@ ENV/

|

||||

|

||||

# mypy

|

||||

.mypy_cache/

|

||||

|

||||

.DS_Store

|

||||

|

||||

317

README.md

317

README.md

@@ -1,6 +1,10 @@

|

||||

|

||||

# InsightFace: 2D and 3D Face Analysis Project

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/custom/logo3.jpg" width="240"/>

|

||||

</div>

|

||||

|

||||

By [Jia Guo](mailto:guojia@gmail.com?subject=[GitHub]%20InsightFace%20Project) and [Jiankang Deng](https://jiankangdeng.github.io/)

|

||||

|

||||

## Top News

|

||||

@@ -21,244 +25,62 @@ The training data containing the annotation (and the models trained with these d

|

||||

|

||||

## Introduction

|

||||

|

||||

InsightFace is an open source 2D&3D deep face analysis toolbox, mainly based on MXNet and PyTorch.

|

||||

[InsightFace](https://insightface.ai) is an open source 2D&3D deep face analysis toolbox, mainly based on PyTorch and MXNet. Please check our [website](https://insightface.ai) for detail.

|

||||

|

||||

The master branch works with **MXNet 1.2 to 1.6**, **PyTorch 1.6+**, with **Python 3.x**.

|

||||

The master branch works with **PyTorch 1.6+** and/or **MXNet=1.6-1.8**, with **Python 3.x**.

|

||||

|

||||

InsightFace efficiently implements a rich variety of state of the art algorithms of face recognition, face detection and face alignment, which optimized for both training and deployment.

|

||||

|

||||

|

||||

## ArcFace Video Demo

|

||||

### ArcFace Video Demo

|

||||

|

||||

[](https://www.youtube.com/watch?v=y-D1tReryGA&t=81s)

|

||||

|

||||

Please click the image to watch the Youtube video. For Bilibili users, click [here](https://www.bilibili.com/video/av38041494?from=search&seid=11501833604850032313).

|

||||

|

||||

## Recent Update

|

||||

|

||||

**`2021-06-05`**: We launch a [Masked Face Recognition Challenge & Workshop](https://github.com/deepinsight/insightface/tree/master/challenges/iccv21-mfr) on ICCV 2021.

|

||||

|

||||

**`2021-05-15`**: We released an efficient high accuracy face detection approach called [SCRFD](https://github.com/deepinsight/insightface/tree/master/detection/scrfd).

|

||||

|

||||

**`2021-04-18`**: We achieved Rank-4th on NIST-FRVT 1:1, see [leaderboard](https://pages.nist.gov/frvt/html/frvt11.html).

|

||||

|

||||

**`2021-03-13`**: We have released our official ArcFace PyTorch implementation, see [here](https://github.com/deepinsight/insightface/tree/master/recognition/arcface_torch).

|

||||

|

||||

**`2021-03-09`**: [Tips](https://github.com/deepinsight/insightface/issues/1426) for training large-scale face recognition model, such as millions of IDs(classes).

|

||||

|

||||

**`2021-02-21`**: We provide a simple face mask renderer [here](https://github.com/deepinsight/insightface/tree/master/recognition/tools) which can be used as a data augmentation tool while training face recognition models.

|

||||

|

||||

**`2021-01-20`**: [OneFlow](https://github.com/Oneflow-Inc/oneflow) based implementation of ArcFace and Partial-FC, [here](https://github.com/deepinsight/insightface/tree/master/recognition/oneflow_face).

|

||||

|

||||

**`2020-10-13`**: A new training method and one large training set(360K IDs) were released [here](https://github.com/deepinsight/insightface/tree/master/recognition/partial_fc) by DeepGlint.

|

||||

|

||||

**`2020-10-09`**: We opened a large scale recognition test benchmark [IFRT](https://github.com/deepinsight/insightface/tree/master/challenges/IFRT)

|

||||

|

||||

**`2020-08-01`**: We released lightweight facial landmark models with fast coordinate regression(106 points). See detail [here](https://github.com/deepinsight/insightface/tree/master/alignment/coordinateReg).

|

||||

|

||||

**`2020-04-27`**: InsightFace pretrained models and MS1M-Arcface are now specified as the only external training dataset, for iQIYI iCartoonFace challenge, see detail [here](http://challenge.ai.iqiyi.com/detail?raceId=5def71b4e9fcf68aef76a75e).

|

||||

|

||||

**`2020.02.21`**: Instant discussion group created on QQ with group-id: 711302608. For English developers, see install tutorial [here](https://github.com/deepinsight/insightface/issues/1069).

|

||||

|

||||

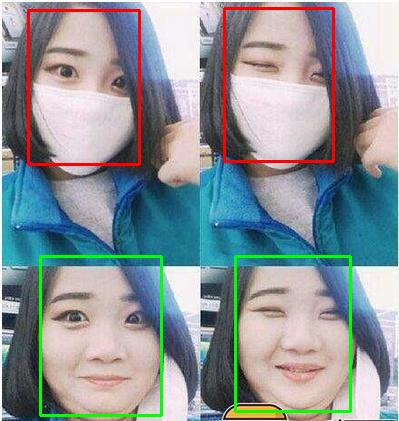

**`2020.02.16`**: RetinaFace now can detect faces with mask, for anti-CoVID19, see detail [here](https://github.com/deepinsight/insightface/tree/master/detection/RetinaFaceAntiCov)

|

||||

|

||||

**`2019.08.10`**: We achieved 2nd place at [WIDER Face Detection Challenge 2019](http://wider-challenge.org/2019.html).

|

||||

|

||||

**`2019.05.30`**: [Presentation at cvmart](https://pan.baidu.com/s/1v9fFHBJ8Q9Kl9Z6GwhbY6A)

|

||||

|

||||

**`2019.04.30`**: Our Face detector ([RetinaFace](https://github.com/deepinsight/insightface/tree/master/detection/RetinaFace)) obtains state-of-the-art results on [the WiderFace dataset](http://shuoyang1213.me/WIDERFACE/WiderFace_Results.html).

|

||||

|

||||

**`2019.04.14`**: We will launch a [Light-weight Face Recognition challenge/workshop](https://github.com/deepinsight/insightface/tree/master/challenges/iccv19-lfr) on ICCV 2019.

|

||||

|

||||

**`2019.04.04`**: Arcface achieved state-of-the-art performance (7/109) on the NIST Face Recognition Vendor Test (FRVT) (1:1 verification)

|

||||

[report](https://www.nist.gov/sites/default/files/documents/2019/04/04/frvt_report_2019_04_04.pdf) (name: Imperial-000 and Imperial-001). Our solution is based on [MS1MV2+DeepGlintAsian, ResNet100, ArcFace loss].

|

||||

|

||||

**`2019.02.08`**: Please check [https://github.com/deepinsight/insightface/tree/master/recognition/ArcFace](https://github.com/deepinsight/insightface/tree/master/recognition/ArcFace) for our parallel training code which can easily and efficiently support one million identities on a single machine (8* 1080ti).

|

||||

|

||||

**`2018.12.13`**: Inference acceleration [TVM-Benchmark](https://github.com/deepinsight/insightface/wiki/TVM-Benchmark).

|

||||

|

||||

**`2018.10.28`**: Light-weight attribute model [Gender-Age](https://github.com/deepinsight/insightface/tree/master/gender-age). About 1MB, 10ms on single CPU core. Gender accuracy 96% on validation set and 4.1 age MAE.

|

||||

|

||||

**`2018.10.16`**: We achieved state-of-the-art performance on [Trillionpairs](http://trillionpairs.deepglint.com/results) (name: nttstar) and [IQIYI_VID](http://challenge.ai.iqiyi.com/detail?raceId=5afc36639689443e8f815f9e) (name: WitcheR).

|

||||

|

||||

|

||||

## Contents

|

||||

[Deep Face Recognition](#deep-face-recognition)

|

||||

- [Introduction](#introduction)

|

||||

- [Training Data](#training-data)

|

||||

- [Train](#train)

|

||||

- [Pretrained Models](#pretrained-models)

|

||||

- [Verification Results On Combined Margin](#verification-results-on-combined-margin)

|

||||

- [Test on MegaFace](#test-on-megaface)

|

||||

- [512-D Feature Embedding](#512-d-feature-embedding)

|

||||

- [Third-party Re-implementation](#third-party-re-implementation)

|

||||

## Projects

|

||||

|

||||

[Face Detection](#face-detection)

|

||||

- [RetinaFace](#retinaface)

|

||||

- [RetinaFaceAntiCov](#retinafaceanticov)

|

||||

The [page](https://insightface.ai/projects) on InsightFace website also describes all supported projects in InsightFace.

|

||||

|

||||

[Face Alignment](#face-alignment)

|

||||

- [DenseUNet](#denseunet)

|

||||

- [CoordinateReg](#coordinatereg)

|

||||

You may also interested in some [challenges](https://insightface.ai/challenges) hold by InsightFace.

|

||||

|

||||

|

||||

[Citation](#citation)

|

||||

|

||||

[Contact](#contact)

|

||||

|

||||

## Deep Face Recognition

|

||||

## Face Recognition

|

||||

|

||||

### Introduction

|

||||

|

||||

In this module, we provide training data, network settings and loss designs for deep face recognition.

|

||||

The training data includes, but not limited to the cleaned MS1M, VGG2 and CASIA-Webface datasets, which were already packed in MXNet binary format.

|

||||

The network backbones include ResNet, MobilefaceNet, MobileNet, InceptionResNet_v2, DenseNet, etc..

|

||||

The loss functions include Softmax, SphereFace, CosineFace, ArcFace, Sub-Center ArcFace and Triplet (Euclidean/Angular) Loss.

|

||||

|

||||

You can check the detail page of our work [ArcFace](https://github.com/deepinsight/insightface/tree/master/recognition/ArcFace)(which accepted in CVPR-2019) and [SubCenter-ArcFace](https://github.com/deepinsight/insightface/tree/master/recognition/SubCenter-ArcFace)(which accepted in ECCV-2020).

|

||||

The supported methods are as follows:

|

||||

|

||||

|

||||

- [x] [ArcFace_mxnet (CVPR'2019)](recognition/arcface_mxnet)

|

||||

- [x] [ArcFace_torch (CVPR'2019)](recognition/arcface_torch)

|

||||

- [x] [SubCenter ArcFace (ECCV'2020)](recognition/subcenter_arcface)

|

||||

- [x] [PartialFC_mxnet (Arxiv'2020)](recognition/partial_fc)

|

||||

- [x] [PartialFC_torch (Arxiv'2020)](recognition/arcface_torch)

|

||||

- [x] [VPL (CVPR'2021)](recognition/vpl)

|

||||

- [x] [OneFlow_face](recognition/oneflow_face)

|

||||

|

||||

Our method, ArcFace, was initially described in an [arXiv technical report](https://arxiv.org/abs/1801.07698). By using this module, you can simply achieve LFW 99.83%+ and Megaface 98%+ by a single model. This module can help researcher/engineer to develop deep face recognition algorithms quickly by only two steps: download the binary dataset and run the training script.

|

||||

|

||||

### Training Data

|

||||

|

||||

All face images are aligned by ficial five landmarks and cropped to 112x112:

|

||||

|

||||

Please check [Dataset-Zoo](https://github.com/deepinsight/insightface/wiki/Dataset-Zoo) for detail information and dataset downloading.

|

||||

Commonly used network backbones are included in most of the methods, such as IResNet, MobilefaceNet, MobileNet, InceptionResNet_v2, DenseNet, etc..

|

||||

|

||||

|

||||

* Please check *recognition/tools/face2rec2.py* on how to build a binary face dataset. You can either choose *MTCNN* or *RetinaFace* to align the faces.

|

||||

### Datasets

|

||||

|

||||

### Train

|

||||

The training data includes, but not limited to the cleaned MS1M, VGG2 and CASIA-Webface datasets, which were already packed in MXNet binary format. Please [dataset](recognition/_dataset_) page for detail.

|

||||

|

||||

1. Install `MXNet` with GPU support (Python 3.X).

|

||||

|

||||

```

|

||||

pip install mxnet-cu101 # which should match your installed cuda version

|

||||

```

|

||||

|

||||

2. Clone the InsightFace repository. We call the directory insightface as *`INSIGHTFACE_ROOT`*.

|

||||

|

||||

```

|

||||

git clone --recursive https://github.com/deepinsight/insightface.git

|

||||

```

|

||||

|

||||

3. Download the training set (`MS1M-Arcface`) and place it in *`$INSIGHTFACE_ROOT/recognition/datasets/`*. Each training dataset includes at least following 6 files:

|

||||

|

||||

```Shell

|

||||

faces_emore/

|

||||

train.idx

|

||||

train.rec

|

||||

property

|

||||

lfw.bin

|

||||

cfp_fp.bin

|

||||

agedb_30.bin

|

||||

```

|

||||

|

||||

The first three files are the training dataset while the last three files are verification sets.

|

||||

|

||||

4. Train deep face recognition models.

|

||||

In this part, we assume you are in the directory *`$INSIGHTFACE_ROOT/recognition/ArcFace/`*.

|

||||

|

||||

Place and edit config file:

|

||||

```Shell

|

||||

cp sample_config.py config.py

|

||||

vim config.py # edit dataset path etc..

|

||||

```

|

||||

|

||||

We give some examples below. Our experiments were conducted on the Tesla P40 GPU.

|

||||

|

||||

(1). Train ArcFace with LResNet100E-IR.

|

||||

|

||||

```Shell

|

||||

CUDA_VISIBLE_DEVICES='0,1,2,3' python -u train.py --network r100 --loss arcface --dataset emore

|

||||

```

|

||||

It will output verification results of *LFW*, *CFP-FP* and *AgeDB-30* every 2000 batches. You can check all options in *config.py*.

|

||||

This model can achieve *LFW 99.83+* and *MegaFace 98.3%+*.

|

||||

|

||||

(2). Train CosineFace with LResNet50E-IR.

|

||||

|

||||

```Shell

|

||||

CUDA_VISIBLE_DEVICES='0,1,2,3' python -u train.py --network r50 --loss cosface --dataset emore

|

||||

```

|

||||

|

||||

(3). Train Softmax with LMobileNet-GAP.

|

||||

|

||||

```Shell

|

||||

CUDA_VISIBLE_DEVICES='0,1,2,3' python -u train.py --network m1 --loss softmax --dataset emore

|

||||

```

|

||||

|

||||

(4). Fine-turn the above Softmax model with Triplet loss.

|

||||

|

||||

```Shell

|

||||

CUDA_VISIBLE_DEVICES='0,1,2,3' python -u train.py --network m1 --loss triplet --lr 0.005 --pretrained ./models/m1-softmax-emore,1

|

||||

```

|

||||

|

||||

(5). Training in model parallel acceleration.

|

||||

|

||||

```Shell

|

||||

CUDA_VISIBLE_DEVICES='0,1,2,3' python -u train_parall.py --network r100 --loss arcface --dataset emore

|

||||

```

|

||||

|

||||

5. Verification results.

|

||||

|

||||

*LResNet100E-IR* network trained on *MS1M-Arcface* dataset with ArcFace loss:

|

||||

|

||||

| Method | LFW(%) | CFP-FP(%) | AgeDB-30(%) |

|

||||

| ------- | ------ | --------- | ----------- |

|

||||

| Ours | 99.80+ | 98.0+ | 98.20+ |

|

||||

### Evaluation

|

||||

|

||||

We provide standard IJB and Megaface evaluation pipelines in [evaluation](recognition/_evaluation_)

|

||||

|

||||

|

||||

### Pretrained Models

|

||||

|

||||

You can use `$INSIGHTFACE_ROOT/recognition/arcface_torch/eval/verification.py` to test all the pre-trained models.

|

||||

|

||||

**Please check [Model-Zoo](https://github.com/deepinsight/insightface/wiki/Model-Zoo) for more pretrained models.**

|

||||

|

||||

|

||||

|

||||

### Verification Results on Combined Margin

|

||||

|

||||

A combined margin method was proposed as a function of target logits value and original `θ`:

|

||||

|

||||

```

|

||||

COM(θ) = cos(m_1*θ+m_2) - m_3

|

||||

```

|

||||

|

||||

For training with `m1=1.0, m2=0.3, m3=0.2`, run following command:

|

||||

```

|

||||

CUDA_VISIBLE_DEVICES='0,1,2,3' python -u train.py --network r100 --loss combined --dataset emore

|

||||

```

|

||||

|

||||

Results by using ``MS1M-IBUG(MS1M-V1)``

|

||||

|

||||

| Method | m1 | m2 | m3 | LFW | CFP-FP | AgeDB-30 |

|

||||

| ---------------- | ---- | ---- | ---- | ----- | ------ | -------- |

|

||||

| W&F Norm Softmax | 1 | 0 | 0 | 99.28 | 88.50 | 95.13 |

|

||||

| SphereFace | 1.5 | 0 | 0 | 99.76 | 94.17 | 97.30 |

|

||||

| CosineFace | 1 | 0 | 0.35 | 99.80 | 94.4 | 97.91 |

|

||||

| ArcFace | 1 | 0.5 | 0 | 99.83 | 94.04 | 98.08 |

|

||||

| Combined Margin | 1.2 | 0.4 | 0 | 99.80 | 94.08 | 98.05 |

|

||||

| Combined Margin | 1.1 | 0 | 0.35 | 99.81 | 94.50 | 98.08 |

|

||||

| Combined Margin | 1 | 0.3 | 0.2 | 99.83 | 94.51 | 98.13 |

|

||||

| Combined Margin | 0.9 | 0.4 | 0.15 | 99.83 | 94.20 | 98.16 |

|

||||

|

||||

### Test on MegaFace

|

||||

|

||||

Please check *`$INSIGHTFACE_ROOT/evaluation/megaface/`* to evaluate the model accuracy on Megaface. All aligned images were already provided.

|

||||

|

||||

|

||||

### 512-D Feature Embedding

|

||||

|

||||

In this part, we assume you are in the directory *`$INSIGHTFACE_ROOT/deploy/`*. The input face image should be generally centre cropped. We use *RNet+ONet* of *MTCNN* to further align the image before sending it to the feature embedding network.

|

||||

|

||||

1. Prepare a pre-trained model.

|

||||

2. Put the model under *`$INSIGHTFACE_ROOT/models/`*. For example, *`$INSIGHTFACE_ROOT/models/model-r100-ii`*.

|

||||

3. Run the test script *`$INSIGHTFACE_ROOT/deploy/test.py`*.

|

||||

|

||||

For single cropped face image(112x112), total inference time is only 17ms on our testing server(Intel E5-2660 @ 2.00GHz, Tesla M40, *LResNet34E-IR*).

|

||||

|

||||

### Third-party Re-implementation

|

||||

### Third-party Re-implementation of ArcFace

|

||||

|

||||

- TensorFlow: [InsightFace_TF](https://github.com/auroua/InsightFace_TF)

|

||||

- TensorFlow: [tf-insightface](https://github.com/AIInAi/tf-insightface)

|

||||

@@ -272,39 +94,43 @@ For single cropped face image(112x112), total inference time is only 17ms on our

|

||||

|

||||

## Face Detection

|

||||

|

||||

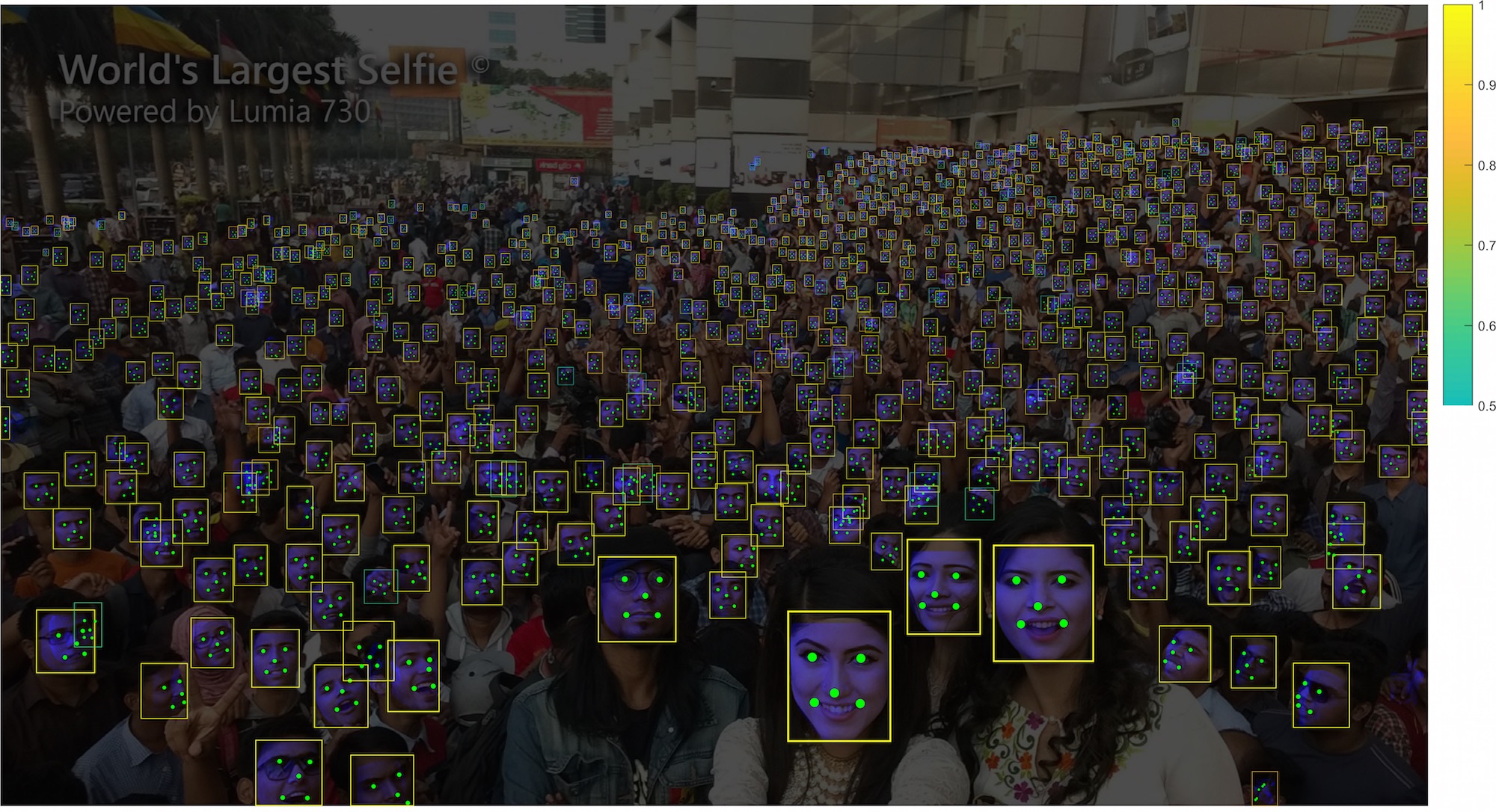

### RetinaFace

|

||||

### Introduction

|

||||

|

||||

RetinaFace is a practical single-stage [SOTA](http://shuoyang1213.me/WIDERFACE/WiderFace_Results.html) face detector which is initially introduced in [arXiv technical report](https://arxiv.org/abs/1905.00641) and then accepted by [CVPR 2020](https://openaccess.thecvf.com/content_CVPR_2020/html/Deng_RetinaFace_Single-Shot_Multi-Level_Face_Localisation_in_the_Wild_CVPR_2020_paper.html). We provide training code, training dataset, pretrained models and evaluation scripts.

|

||||

|

||||

|

||||

|

||||

Please check [RetinaFace](https://github.com/deepinsight/insightface/tree/master/detection/RetinaFace) for detail.

|

||||

|

||||

### RetinaFaceAntiCov

|

||||

|

||||

RetinaFaceAntiCov is an experimental module to identify face boxes with masks. Please check [RetinaFaceAntiCov](https://github.com/deepinsight/insightface/tree/master/detection/RetinaFaceAntiCov) for detail.

|

||||

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/github/11513D05.jpg" width="640"/>

|

||||

</div>

|

||||

|

||||

In this module, we provide training data with annotation, network settings and loss designs for face detection training, evaluation and inference.

|

||||

|

||||

The supported methods are as follows:

|

||||

|

||||

- [x] [RetinaFace (CVPR'2020)](detection/retinaface)

|

||||

- [x] [SCRFD (Arxiv'2021)](detection/scrfd)

|

||||

|

||||

[RetinaFace](detection/retinaface) is a practical single-stage face detector which is accepted by [CVPR 2020](https://openaccess.thecvf.com/content_CVPR_2020/html/Deng_RetinaFace_Single-Shot_Multi-Level_Face_Localisation_in_the_Wild_CVPR_2020_paper.html). We provide training code, training dataset, pretrained models and evaluation scripts.

|

||||

|

||||

[SCRFD](detection/scrfd) is an efficient high accuracy face detection approach which is initialy described in [Arxiv](https://arxiv.org/abs/2105.04714). We provide an easy-to-use pipeline to train high efficiency face detectors with NAS supporting.

|

||||

|

||||

|

||||

## Face Alignment

|

||||

|

||||

### DenseUNet

|

||||

### Introduction

|

||||

|

||||

Please check the [Menpo](https://github.com/jiankangdeng/MenpoBenchmark) Benchmark and our [Dense U-Net](https://github.com/deepinsight/insightface/tree/master/alignment/heatmapReg) for detail. We also provide other network settings such as classic hourglass. You can find all of training code, training dataset and evaluation scripts there.

|

||||

|

||||

### CoordinateReg

|

||||

|

||||

On the other hand, in contrast to heatmap based approaches, we provide some lightweight facial landmark models with fast coordinate regression. The input of these models is loose cropped face image while the output is the direct landmark coordinates. See detail at [alignment-coordinateReg](https://github.com/deepinsight/insightface/tree/master/alignment/coordinateReg). Now only pretrained models available.

|

||||

|

||||

<div align="center">

|

||||

<img src="https://github.com/nttstar/insightface-resources/blob/master/alignment/images/t1_out.jpg" alt="imagevis" width="800">

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/custom/thumb_sdunet.png" width="600"/>

|

||||

</div>

|

||||

|

||||

In this module, we provide datasets and training/inference pipelines for face alignment.

|

||||

|

||||

<div align="center">

|

||||

<img src="https://github.com/nttstar/insightface-resources/blob/master/alignment/images/C_jiaguo.gif" alt="videovis" width="240">

|

||||

</div>

|

||||

Supported methods:

|

||||

|

||||

- [x] [SDUNets (BMVC'2018)](alignment/heatmap)

|

||||

- [x] [SimpleRegression](alignment/coordinate_reg)

|

||||

|

||||

|

||||

[SDUNets](alignment/heatmap) is a heatmap based method which accepted on [BMVC](http://bmvc2018.org/contents/papers/0051.pdf).

|

||||

|

||||

[SimpleRegression](alignment/coordinate_reg) provides very lightweight facial landmark models with fast coordinate regression. The input of these models is loose cropped face image while the output is the direct landmark coordinates.

|

||||

|

||||

|

||||

## Citation

|

||||

@@ -312,11 +138,34 @@ For single cropped face image(112x112), total inference time is only 17ms on our

|

||||

If you find *InsightFace* useful in your research, please consider to cite the following related papers:

|

||||

|

||||

```

|

||||

@inproceedings{deng2019retinaface,

|

||||

title={RetinaFace: Single-stage Dense Face Localisation in the Wild},

|

||||

author={Deng, Jiankang and Guo, Jia and Yuxiang, Zhou and Jinke Yu and Irene Kotsia and Zafeiriou, Stefanos},

|

||||

booktitle={arxiv},

|

||||

year={2019}

|

||||

|

||||

@article{guo2021sample,

|

||||

title={Sample and Computation Redistribution for Efficient Face Detection},

|

||||

author={Guo, Jia and Deng, Jiankang and Lattas, Alexandros and Zafeiriou, Stefanos},

|

||||

journal={arXiv preprint arXiv:2105.04714},

|

||||

year={2021}

|

||||

}

|

||||

|

||||

@inproceedings{an2020partical_fc,

|

||||

title={Partial FC: Training 10 Million Identities on a Single Machine},

|

||||

author={An, Xiang and Zhu, Xuhan and Xiao, Yang and Wu, Lan and Zhang, Ming and Gao, Yuan and Qin, Bin and

|

||||

Zhang, Debing and Fu Ying},

|

||||

booktitle={Arxiv 2010.05222},

|

||||

year={2020}

|

||||

}

|

||||

|

||||

@inproceedings{deng2020subcenter,

|

||||

title={Sub-center ArcFace: Boosting Face Recognition by Large-scale Noisy Web Faces},

|

||||

author={Deng, Jiankang and Guo, Jia and Liu, Tongliang and Gong, Mingming and Zafeiriou, Stefanos},

|

||||

booktitle={Proceedings of the IEEE Conference on European Conference on Computer Vision},

|

||||

year={2020}

|

||||

}

|

||||

|

||||

@inproceedings{Deng2020CVPR,

|

||||

title = {RetinaFace: Single-Shot Multi-Level Face Localisation in the Wild},

|

||||

author = {Deng, Jiankang and Guo, Jia and Ververas, Evangelos and Kotsia, Irene and Zafeiriou, Stefanos},

|

||||

booktitle = {CVPR},

|

||||

year = {2020}

|

||||

}

|

||||

|

||||

@inproceedings{guo2018stacked,

|

||||

|

||||

@@ -1,4 +1,42 @@

|

||||

You can now find heatmap based approaches under ``heatmapReg`` directory.

|

||||

## Face Alignment

|

||||

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/custom/logo3.jpg" width="240"/>

|

||||

</div>

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

These are the face alignment methods of [InsightFace](https://insightface.ai)

|

||||

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/custom/thumb_sdunet.png" width="600"/>

|

||||

</div>

|

||||

|

||||

|

||||

### Datasets

|

||||

|

||||

Please refer to [datasets](_datasets_) page for the details of face alignment datasets used for training and evaluation.

|

||||

|

||||

### Evaluation

|

||||

|

||||

Please refer to [evaluation](_evaluation_) page for the details of face alignment evaluation.

|

||||

|

||||

|

||||

## Methods

|

||||

|

||||

|

||||

Supported methods:

|

||||

|

||||

- [x] [SDUNets (BMVC'2018)](heatmap)

|

||||

- [x] [SimpleRegression](coordinate_reg)

|

||||

|

||||

|

||||

|

||||

## Contributing

|

||||

|

||||

We appreciate all contributions to improve the face alignment model zoo of InsightFace.

|

||||

|

||||

You can now find coordinate regression approaches under ``coordinateReg`` directory.

|

||||

|

||||

|

||||

41

attribute/README.md

Normal file

41

attribute/README.md

Normal file

@@ -0,0 +1,41 @@

|

||||

## Face Alignment

|

||||

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/custom/logo3.jpg" width="320"/>

|

||||

</div>

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

These are the face attribute methods of [InsightFace](https://insightface.ai)

|

||||

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/github/t1_genderage.jpg" width="600"/>

|

||||

</div>

|

||||

|

||||

|

||||

### Datasets

|

||||

|

||||

Please refer to [datasets](_datasets_) page for the details of face attribute datasets used for training and evaluation.

|

||||

|

||||

### Evaluation

|

||||

|

||||

Please refer to [evaluation](_evaluation_) page for the details of face attribute evaluation.

|

||||

|

||||

|

||||

## Methods

|

||||

|

||||

|

||||

Supported methods:

|

||||

|

||||

- [x] [Gender_Age](gender_age)

|

||||

|

||||

|

||||

|

||||

## Contributing

|

||||

|

||||

We appreciate all contributions to improve the face attribute model zoo of InsightFace.

|

||||

|

||||

|

||||

@@ -4,25 +4,21 @@ import sys

|

||||

import numpy as np

|

||||

import insightface

|

||||

from insightface.app import FaceAnalysis

|

||||

from insightface.data import get_image as ins_get_image

|

||||

|

||||

assert insightface.__version__>='0.2'

|

||||

|

||||

parser = argparse.ArgumentParser(description='insightface test')

|

||||

parser = argparse.ArgumentParser(description='insightface gender-age test')

|

||||

# general

|

||||

parser.add_argument('--ctx', default=0, type=int, help='ctx id, <0 means using cpu')

|

||||

args = parser.parse_args()

|

||||

|

||||

app = FaceAnalysis(name='antelope')

|

||||

app = FaceAnalysis(allowed_modules=['detection', 'genderage'])

|

||||

app.prepare(ctx_id=args.ctx, det_size=(640,640))

|

||||

|

||||

img = cv2.imread('../sample-images/t1.jpg')

|

||||

img = ins_get_image('t1')

|

||||

faces = app.get(img)

|

||||

assert len(faces)==6

|

||||

rimg = app.draw_on(img, faces)

|

||||

cv2.imwrite("./t1_output.jpg", rimg)

|

||||

print(len(faces))

|

||||

for face in faces:

|

||||

print(face.bbox)

|

||||

print(face.kps)

|

||||

print(face.embedding.shape)

|

||||

print(face.sex, face.age)

|

||||

|

||||

31

challenges/README.md

Normal file

31

challenges/README.md

Normal file

@@ -0,0 +1,31 @@

|

||||

## Challenges

|

||||

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/custom/logo3.jpg" width="240"/>

|

||||

</div>

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

These are challenges hold by [InsightFace](https://insightface.ai)

|

||||

|

||||

|

||||

<div align="left">

|

||||

<img src="https://insightface.ai/assets/img/custom/thumb_ifrt.png" width="480"/>

|

||||

</div>

|

||||

|

||||

|

||||

|

||||

## List

|

||||

|

||||

|

||||

Supported methods:

|

||||

|

||||

- [LFR19 (ICCVW'2019)](iccv19-lfr)

|

||||

- [MFR21 (ICCVW'2021)](iccv21-mfr)

|

||||

- [IFRT](ifrt)

|

||||

|

||||

|

||||

|

||||

|

||||

@@ -31,7 +31,7 @@ insightface.challenge@gmail.com

|

||||

|

||||

*For Chinese:*

|

||||

|

||||

|

||||

|

||||

|

||||

*For English:*

|

||||

|

||||

|

||||

@@ -1,8 +0,0 @@

|

||||

InsightFace deployment README

|

||||

---

|

||||

|

||||

For insightface pip-package <= 0.1.5, we use MXNet as inference backend, please download all models from [onedrive](https://1drv.ms/u/s!AswpsDO2toNKrUy0VktHTWgIQ0bn?e=UEF7C4), and put them all under `~/.insightface/models/` directory.

|

||||

|

||||

Starting from insightface>=0.2, we use onnxruntime as inference backend, please download our **antelope** model release from [onedrive](https://1drv.ms/u/s!AswpsDO2toNKrU0ydGgDkrHPdJ3m?e=iVgZox), and put it under `~/.insightface/models/`, so there're onnx models at `~/.insightface/models/antelope/*.onnx`.

|

||||

|

||||

The **antelope** model release contains `ResNet100@Glint360K recognition model` and `SCRFD-10GF detection model`. Please check `test.py` for detail.

|

||||

@@ -1,40 +0,0 @@

|

||||

import sys

|

||||

import os

|

||||

import argparse

|

||||

import onnx

|

||||

import mxnet as mx

|

||||

|

||||

print('mxnet version:', mx.__version__)

|

||||

print('onnx version:', onnx.__version__)

|

||||

#make sure to install onnx-1.2.1

|

||||

#pip uninstall onnx

|

||||

#pip install onnx==1.2.1

|

||||

assert onnx.__version__ == '1.2.1'

|

||||

import numpy as np

|

||||

from mxnet.contrib import onnx as onnx_mxnet

|

||||

|

||||

parser = argparse.ArgumentParser(

|

||||

description='convert insightface models to onnx')

|

||||

# general

|

||||

parser.add_argument('--prefix',

|

||||

default='./r100-arcface/model',

|

||||

help='prefix to load model.')

|

||||

parser.add_argument('--epoch',

|

||||

default=0,

|

||||

type=int,

|

||||

help='epoch number to load model.')

|

||||

parser.add_argument('--input-shape', default='3,112,112', help='input shape.')

|

||||

parser.add_argument('--output-onnx',

|

||||

default='./r100.onnx',

|

||||

help='path to write onnx model.')

|

||||

args = parser.parse_args()

|

||||

input_shape = (1, ) + tuple([int(x) for x in args.input_shape.split(',')])

|

||||

print('input-shape:', input_shape)

|

||||

|

||||

sym_file = "%s-symbol.json" % args.prefix

|

||||

params_file = "%s-%04d.params" % (args.prefix, args.epoch)

|

||||

assert os.path.exists(sym_file)

|

||||

assert os.path.exists(params_file)

|

||||

converted_model_path = onnx_mxnet.export_model(sym_file, params_file,

|

||||

[input_shape], np.float32,

|

||||

args.output_onnx)

|

||||

@@ -1,67 +0,0 @@

|

||||

from __future__ import absolute_import

|

||||

from __future__ import division

|

||||

from __future__ import print_function

|

||||

|

||||

import sys

|

||||

import os

|

||||

import argparse

|

||||

import numpy as np

|

||||

import mxnet as mx

|

||||

import cv2

|

||||

import insightface

|

||||

from insightface.utils import face_align

|

||||

|

||||

|

||||

def do_flip(data):

|

||||

for idx in range(data.shape[0]):

|

||||

data[idx, :, :] = np.fliplr(data[idx, :, :])

|

||||

|

||||

|

||||

def get_model(ctx, image_size, prefix, epoch, layer):

|

||||

print('loading', prefix, epoch)

|

||||

sym, arg_params, aux_params = mx.model.load_checkpoint(prefix, epoch)

|

||||

all_layers = sym.get_internals()

|

||||

sym = all_layers[layer + '_output']

|

||||

model = mx.mod.Module(symbol=sym, context=ctx, label_names=None)

|

||||

#model.bind(data_shapes=[('data', (args.batch_size, 3, image_size[0], image_size[1]))], label_shapes=[('softmax_label', (args.batch_size,))])

|

||||

model.bind(data_shapes=[('data', (1, 3, image_size[0], image_size[1]))])

|

||||

model.set_params(arg_params, aux_params)

|

||||

return model

|

||||

|

||||

|

||||

class FaceModel:

|

||||

def __init__(self, ctx_id, model_prefix, model_epoch, use_large_detector=False):

|

||||

if use_large_detector:

|

||||

self.detector = insightface.model_zoo.get_model('retinaface_r50_v1')

|

||||

else:

|

||||

self.detector = insightface.model_zoo.get_model('retinaface_mnet025_v2')

|

||||

self.detector.prepare(ctx_id=ctx_id)

|

||||

if ctx_id>=0:

|

||||

ctx = mx.gpu(ctx_id)

|

||||

else:

|

||||

ctx = mx.cpu()

|

||||

image_size = (112,112)

|

||||

self.model = get_model(ctx, image_size, model_prefix, model_epoch, 'fc1')

|

||||

self.image_size = image_size

|

||||

|

||||

def get_input(self, face_img):

|

||||

bbox, pts5 = self.detector.detect(face_img, threshold=0.8)

|

||||

if bbox.shape[0]==0:

|

||||

return None

|

||||

bbox = bbox[0, 0:4]

|

||||

pts5 = pts5[0, :]

|

||||

nimg = face_align.norm_crop(face_img, pts5)

|

||||

return nimg

|

||||

|

||||

def get_feature(self, aligned):

|

||||

a = cv2.cvtColor(aligned, cv2.COLOR_BGR2RGB)

|

||||

a = np.transpose(a, (2, 0, 1))

|

||||

input_blob = np.expand_dims(a, axis=0)

|

||||

data = mx.nd.array(input_blob)

|

||||

db = mx.io.DataBatch(data=(data, ))

|

||||

self.model.forward(db, is_train=False)

|

||||

emb = self.model.get_outputs()[0].asnumpy()[0]

|

||||

norm = np.sqrt(np.sum(emb*emb)+0.00001)

|

||||

emb /= norm

|

||||

return emb

|

||||

|

||||

@@ -1,32 +0,0 @@

|

||||

from __future__ import absolute_import

|

||||

from __future__ import division

|

||||

from __future__ import print_function

|

||||

|

||||

import sys

|

||||

import os

|

||||

import argparse

|

||||

import numpy as np

|

||||

import mxnet as mx

|

||||

|

||||

parser = argparse.ArgumentParser(description='face model slim')

|

||||

# general

|

||||

parser.add_argument('--model',

|

||||

default='../models/model-r34-amf/model,60',

|

||||

help='path to load model.')

|

||||

args = parser.parse_args()

|

||||

|

||||

_vec = args.model.split(',')

|

||||

assert len(_vec) == 2

|

||||

prefix = _vec[0]

|

||||

epoch = int(_vec[1])

|

||||

print('loading', prefix, epoch)

|

||||

sym, arg_params, aux_params = mx.model.load_checkpoint(prefix, epoch)

|

||||

all_layers = sym.get_internals()

|

||||

sym = all_layers['fc1_output']

|

||||

dellist = []

|

||||

for k, v in arg_params.iteritems():

|

||||

if k.startswith('fc7'):

|

||||

dellist.append(k)

|

||||

for d in dellist:

|

||||

del arg_params[d]

|

||||

mx.model.save_checkpoint(prefix + "s", 0, sym, arg_params, aux_params)

|

||||

Binary file not shown.

@@ -1,266 +0,0 @@

|

||||

{

|

||||

"nodes": [

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "data",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv1_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv1_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(3,3)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "10",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv1",

|

||||

"inputs": [[0, 0], [1, 0], [2, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prelu1_gamma",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "LeakyReLU",

|

||||

"param": {

|

||||

"act_type": "prelu",

|

||||

"lower_bound": "0.125",

|

||||

"slope": "0.25",

|

||||

"upper_bound": "0.334"

|

||||

},

|

||||

"name": "prelu1",

|

||||

"inputs": [[3, 0], [4, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Pooling",

|

||||

"param": {

|

||||

"global_pool": "False",

|

||||

"kernel": "(2,2)",

|

||||

"pad": "(0,0)",

|

||||

"pool_type": "max",

|

||||

"pooling_convention": "full",

|

||||

"stride": "(2,2)"

|

||||

},

|

||||

"name": "pool1",

|

||||

"inputs": [[5, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv2_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv2_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(3,3)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "16",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv2",

|

||||

"inputs": [[6, 0], [7, 0], [8, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prelu2_gamma",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "LeakyReLU",

|

||||

"param": {

|

||||

"act_type": "prelu",

|

||||

"lower_bound": "0.125",

|

||||

"slope": "0.25",

|

||||

"upper_bound": "0.334"

|

||||

},

|

||||

"name": "prelu2",

|

||||

"inputs": [[9, 0], [10, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv3_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv3_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(3,3)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "32",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv3",

|

||||

"inputs": [[11, 0], [12, 0], [13, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prelu3_gamma",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "LeakyReLU",

|

||||

"param": {

|

||||

"act_type": "prelu",

|

||||

"lower_bound": "0.125",

|

||||

"slope": "0.25",

|

||||

"upper_bound": "0.334"

|

||||

},

|

||||

"name": "prelu3",

|

||||

"inputs": [[14, 0], [15, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv4_2_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv4_2_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(1,1)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "4",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv4_2",

|

||||

"inputs": [[16, 0], [17, 0], [18, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv4_1_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv4_1_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(1,1)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "2",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv4_1",

|

||||

"inputs": [[16, 0], [20, 0], [21, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "SoftmaxActivation",

|

||||

"param": {"mode": "channel"},

|

||||

"name": "prob1",

|

||||

"inputs": [[22, 0]],

|

||||

"backward_source_id": -1

|

||||

}

|

||||

],

|

||||

"arg_nodes": [

|

||||

0,

|

||||

1,

|

||||

2,

|

||||

4,

|

||||

7,

|

||||

8,

|

||||

10,

|

||||

12,

|

||||

13,

|

||||

15,

|

||||

17,

|

||||

18,

|

||||

20,

|

||||

21

|

||||

],

|

||||

"heads": [[19, 0], [23, 0]]

|

||||

}

|

||||

Binary file not shown.

@@ -1,177 +0,0 @@

|

||||

name: "PNet"

|

||||

input: "data"

|

||||

input_dim: 1

|

||||

input_dim: 3

|

||||

input_dim: 12

|

||||

input_dim: 12

|

||||

|

||||

layer {

|

||||

name: "conv1"

|

||||

type: "Convolution"

|

||||

bottom: "data"

|

||||

top: "conv1"

|

||||

param {

|

||||

lr_mult: 1

|

||||

decay_mult: 1

|

||||

}

|

||||

param {

|

||||

lr_mult: 2

|

||||

decay_mult: 0

|

||||

}

|

||||

convolution_param {

|

||||

num_output: 10

|

||||

kernel_size: 3

|

||||

stride: 1

|

||||

weight_filler {

|

||||

type: "xavier"

|

||||

}

|

||||

bias_filler {

|

||||

type: "constant"

|

||||

value: 0

|

||||

}

|

||||

}

|

||||

}

|

||||

layer {

|

||||

name: "PReLU1"

|

||||

type: "PReLU"

|

||||

bottom: "conv1"

|

||||

top: "conv1"

|

||||

}

|

||||

layer {

|

||||

name: "pool1"

|

||||

type: "Pooling"

|

||||

bottom: "conv1"

|

||||

top: "pool1"

|

||||

pooling_param {

|

||||

pool: MAX

|

||||

kernel_size: 2

|

||||

stride: 2

|

||||

}

|

||||

}

|

||||

|

||||

layer {

|

||||

name: "conv2"

|

||||

type: "Convolution"

|

||||

bottom: "pool1"

|

||||

top: "conv2"

|

||||

param {

|

||||

lr_mult: 1

|

||||

decay_mult: 1

|

||||

}

|

||||

param {

|

||||

lr_mult: 2

|

||||

decay_mult: 0

|

||||

}

|

||||

convolution_param {

|

||||

num_output: 16

|

||||

kernel_size: 3

|

||||

stride: 1

|

||||

weight_filler {

|

||||

type: "xavier"

|

||||

}

|

||||

bias_filler {

|

||||

type: "constant"

|

||||

value: 0

|

||||

}

|

||||

}

|

||||

}

|

||||

layer {

|

||||

name: "PReLU2"

|

||||

type: "PReLU"

|

||||

bottom: "conv2"

|

||||

top: "conv2"

|

||||

}

|

||||

|

||||

layer {

|

||||

name: "conv3"

|

||||

type: "Convolution"

|

||||

bottom: "conv2"

|

||||

top: "conv3"

|

||||

param {

|

||||

lr_mult: 1

|

||||

decay_mult: 1

|

||||

}

|

||||

param {

|

||||

lr_mult: 2

|

||||

decay_mult: 0

|

||||

}

|

||||

convolution_param {

|

||||

num_output: 32

|

||||

kernel_size: 3

|

||||

stride: 1

|

||||

weight_filler {

|

||||

type: "xavier"

|

||||

}

|

||||

bias_filler {

|

||||

type: "constant"

|

||||

value: 0

|

||||

}

|

||||

}

|

||||

}

|

||||

layer {

|

||||

name: "PReLU3"

|

||||

type: "PReLU"

|

||||

bottom: "conv3"

|

||||

top: "conv3"

|

||||

}

|

||||

|

||||

|

||||

layer {

|

||||

name: "conv4-1"

|

||||

type: "Convolution"

|

||||

bottom: "conv3"

|

||||

top: "conv4-1"

|

||||

param {

|

||||

lr_mult: 1

|

||||

decay_mult: 1

|

||||

}

|

||||

param {

|

||||

lr_mult: 2

|

||||

decay_mult: 0

|

||||

}

|

||||

convolution_param {

|

||||

num_output: 2

|

||||

kernel_size: 1

|

||||

stride: 1

|

||||

weight_filler {

|

||||

type: "xavier"

|

||||

}

|

||||

bias_filler {

|

||||

type: "constant"

|

||||

value: 0

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

layer {

|

||||

name: "conv4-2"

|

||||

type: "Convolution"

|

||||

bottom: "conv3"

|

||||

top: "conv4-2"

|

||||

param {

|

||||

lr_mult: 1

|

||||

decay_mult: 1

|

||||

}

|

||||

param {

|

||||

lr_mult: 2

|

||||

decay_mult: 0

|

||||

}

|

||||

convolution_param {

|

||||

num_output: 4

|

||||

kernel_size: 1

|

||||

stride: 1

|

||||

weight_filler {

|

||||

type: "xavier"

|

||||

}

|

||||

bias_filler {

|

||||

type: "constant"

|

||||

value: 0

|

||||

}

|

||||

}

|

||||

}

|

||||

layer {

|

||||

name: "prob1"

|

||||

type: "Softmax"

|

||||

bottom: "conv4-1"

|

||||

top: "prob1"

|

||||

}

|

||||

Binary file not shown.

@@ -1,324 +0,0 @@

|

||||

{

|

||||

"nodes": [

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "data",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv1_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv1_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(3,3)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "28",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv1",

|

||||

"inputs": [[0, 0], [1, 0], [2, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prelu1_gamma",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "LeakyReLU",

|

||||

"param": {

|

||||

"act_type": "prelu",

|

||||

"lower_bound": "0.125",

|

||||

"slope": "0.25",

|

||||

"upper_bound": "0.334"

|

||||

},

|

||||

"name": "prelu1",

|

||||

"inputs": [[3, 0], [4, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Pooling",

|

||||

"param": {

|

||||

"global_pool": "False",

|

||||

"kernel": "(3,3)",

|

||||

"pad": "(0,0)",

|

||||

"pool_type": "max",

|

||||

"pooling_convention": "full",

|

||||

"stride": "(2,2)"

|

||||

},

|

||||

"name": "pool1",

|

||||

"inputs": [[5, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv2_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv2_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(3,3)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "48",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv2",

|

||||

"inputs": [[6, 0], [7, 0], [8, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prelu2_gamma",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "LeakyReLU",

|

||||

"param": {

|

||||

"act_type": "prelu",

|

||||

"lower_bound": "0.125",

|

||||

"slope": "0.25",

|

||||

"upper_bound": "0.334"

|

||||

},

|

||||

"name": "prelu2",

|

||||

"inputs": [[9, 0], [10, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Pooling",

|

||||

"param": {

|

||||

"global_pool": "False",

|

||||

"kernel": "(3,3)",

|

||||

"pad": "(0,0)",

|

||||

"pool_type": "max",

|

||||

"pooling_convention": "full",

|

||||

"stride": "(2,2)"

|

||||

},

|

||||

"name": "pool2",

|

||||

"inputs": [[11, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv3_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv3_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "Convolution",

|

||||

"param": {

|

||||

"cudnn_off": "False",

|

||||

"cudnn_tune": "off",

|

||||

"dilate": "(1,1)",

|

||||

"kernel": "(2,2)",

|

||||

"no_bias": "False",

|

||||

"num_filter": "64",

|

||||

"num_group": "1",

|

||||

"pad": "(0,0)",

|

||||

"stride": "(1,1)",

|

||||

"workspace": "1024"

|

||||

},

|

||||

"name": "conv3",

|

||||

"inputs": [[12, 0], [13, 0], [14, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prelu3_gamma",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "LeakyReLU",

|

||||

"param": {

|

||||

"act_type": "prelu",

|

||||

"lower_bound": "0.125",

|

||||

"slope": "0.25",

|

||||

"upper_bound": "0.334"

|

||||

},

|

||||

"name": "prelu3",

|

||||

"inputs": [[15, 0], [16, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv4_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv4_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "FullyConnected",

|

||||

"param": {

|

||||

"no_bias": "False",

|

||||

"num_hidden": "128"

|

||||

},

|

||||

"name": "conv4",

|

||||

"inputs": [[17, 0], [18, 0], [19, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prelu4_gamma",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "LeakyReLU",

|

||||

"param": {

|

||||

"act_type": "prelu",

|

||||

"lower_bound": "0.125",

|

||||

"slope": "0.25",

|

||||

"upper_bound": "0.334"

|

||||

},

|

||||

"name": "prelu4",

|

||||

"inputs": [[20, 0], [21, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv5_2_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv5_2_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "FullyConnected",

|

||||

"param": {

|

||||

"no_bias": "False",

|

||||

"num_hidden": "4"

|

||||

},

|

||||

"name": "conv5_2",

|

||||

"inputs": [[22, 0], [23, 0], [24, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv5_1_weight",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "conv5_1_bias",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "FullyConnected",

|

||||

"param": {

|

||||

"no_bias": "False",

|

||||

"num_hidden": "2"

|

||||

},

|

||||

"name": "conv5_1",

|

||||

"inputs": [[22, 0], [26, 0], [27, 0]],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "null",

|

||||

"param": {},

|

||||

"name": "prob1_label",

|

||||

"inputs": [],

|

||||

"backward_source_id": -1

|

||||

},

|

||||

{

|

||||

"op": "SoftmaxOutput",

|

||||

"param": {

|

||||

"grad_scale": "1",

|

||||

"ignore_label": "-1",

|

||||

"multi_output": "False",

|

||||

"normalization": "null",

|

||||

"use_ignore": "False"

|

||||

},

|

||||

"name": "prob1",

|

||||

"inputs": [[28, 0], [29, 0]],

|

||||

"backward_source_id": -1

|

||||

}

|

||||

],

|

||||

"arg_nodes": [

|

||||

0,

|

||||

1,

|

||||

2,

|

||||

4,

|

||||

7,

|

||||

8,

|

||||

10,

|

||||

13,

|

||||

14,

|

||||

16,

|

||||

18,

|

||||

19,

|

||||

21,

|

||||

23,

|

||||

24,

|

||||

26,

|

||||

27,

|

||||

29

|

||||

],

|

||||

"heads": [[25, 0], [30, 0]]

|

||||

}

|

||||

Binary file not shown.

@@ -1,228 +0,0 @@

|

||||

name: "RNet"

|

||||

input: "data"

|

||||

input_dim: 1

|

||||

input_dim: 3

|

||||

input_dim: 24

|

||||

input_dim: 24

|

||||

|

||||

|

||||

##########################

|

||||

######################

|

||||

layer {

|

||||

name: "conv1"

|

||||

type: "Convolution"

|

||||

bottom: "data"

|

||||

top: "conv1"

|

||||

param {

|

||||

lr_mult: 0

|

||||

decay_mult: 0

|

||||

}

|

||||

param {

|

||||

lr_mult: 0

|

||||

decay_mult: 0

|

||||

}

|

||||

convolution_param {

|

||||

num_output: 28

|

||||

kernel_size: 3

|

||||

stride: 1

|

||||

weight_filler {

|

||||

type: "xavier"

|

||||

}

|

||||

bias_filler {

|

||||

type: "constant"

|

||||

value: 0

|

||||

}

|

||||

}

|

||||

}

|

||||

layer {

|

||||

name: "prelu1"

|

||||

type: "PReLU"

|

||||

bottom: "conv1"

|

||||

top: "conv1"

|

||||

propagate_down: true

|

||||

}

|

||||

layer {

|

||||

name: "pool1"

|

||||

type: "Pooling"

|

||||

bottom: "conv1"

|

||||

top: "pool1"

|

||||

pooling_param {

|

||||

pool: MAX

|

||||

kernel_size: 3

|

||||

stride: 2

|

||||

}

|

||||

}

|

||||

|

||||

layer {

|

||||

name: "conv2"

|

||||

type: "Convolution"

|

||||

bottom: "pool1"

|

||||

top: "conv2"

|

||||

param {

|

||||

lr_mult: 0

|

||||

decay_mult: 0

|

||||

}

|

||||

param {

|

||||

lr_mult: 0

|

||||

decay_mult: 0

|

||||

}

|

||||

convolution_param {

|

||||

num_output: 48

|

||||

kernel_size: 3

|

||||

stride: 1

|

||||

weight_filler {

|

||||

type: "xavier"

|

||||

}

|

||||

bias_filler {

|

||||

type: "constant"

|

||||

value: 0

|

||||

}

|

||||

}

|

||||

}

|

||||

layer {

|

||||

name: "prelu2"

|

||||

type: "PReLU"

|

||||