-

+

+

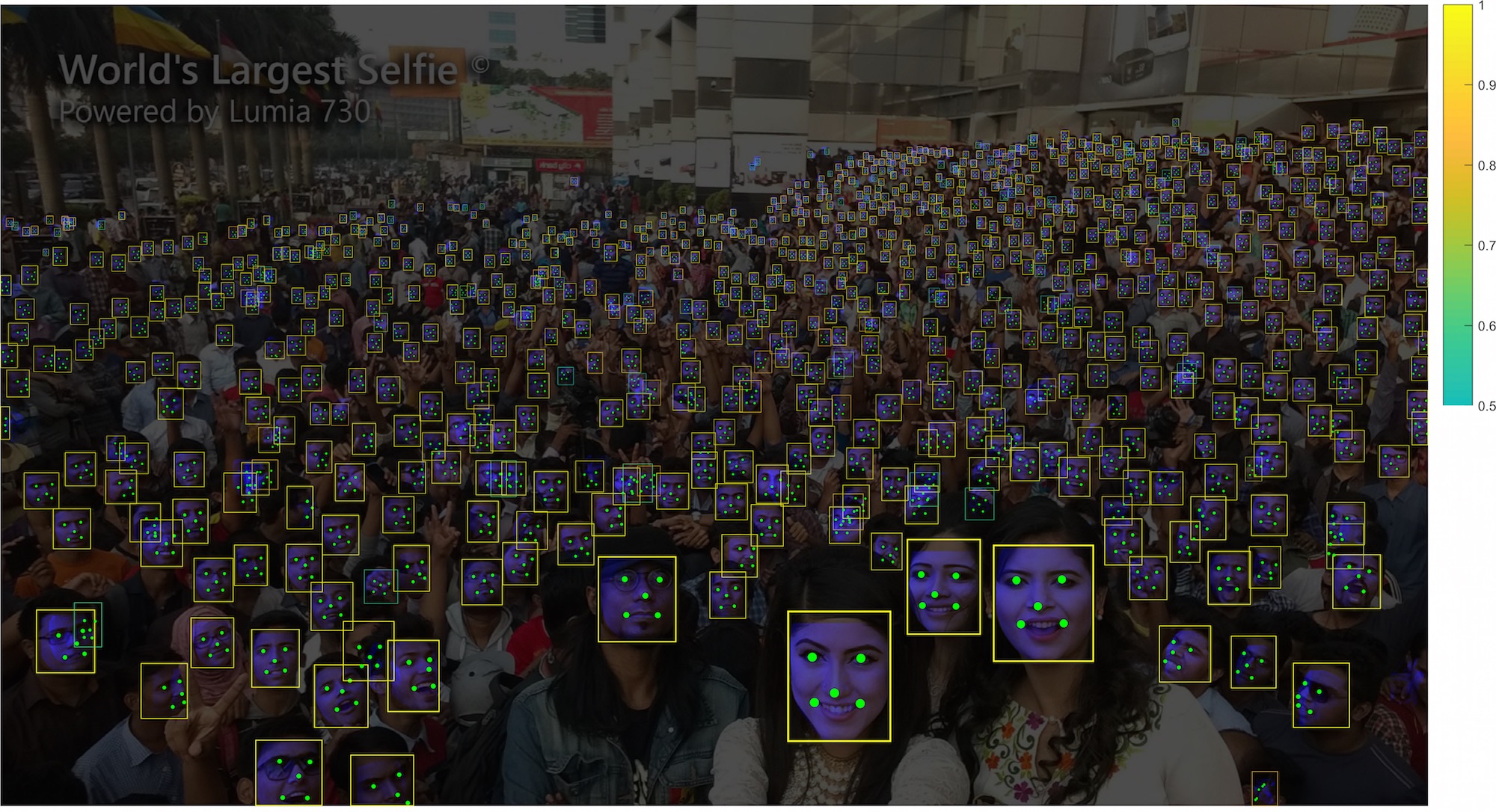

+In this module, we provide datasets and training/inference pipelines for face alignment.

-

-

-

+Supported methods:

+

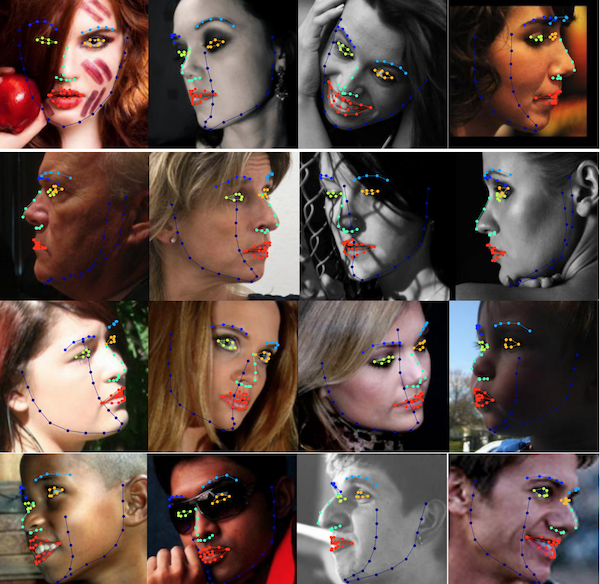

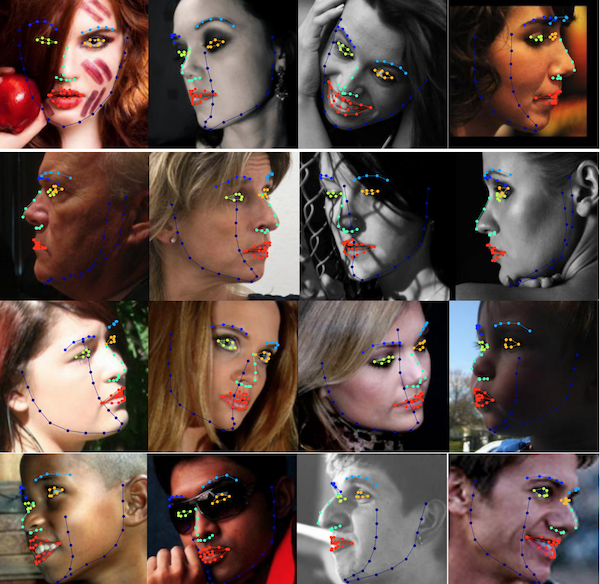

+- [x] [SDUNets (BMVC'2018)](alignment/heatmap)

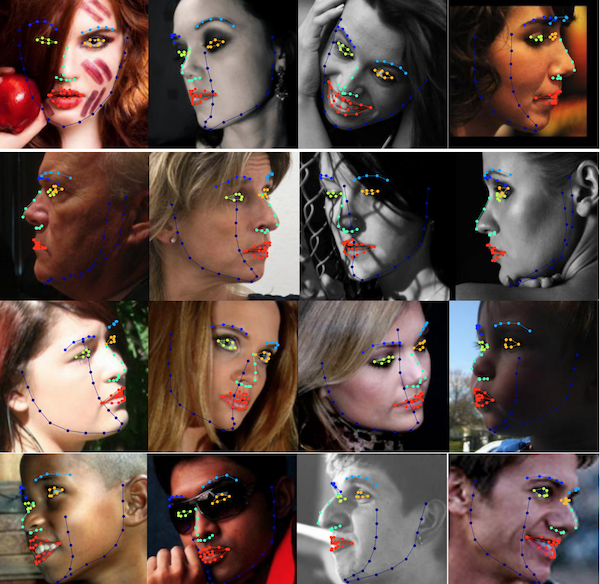

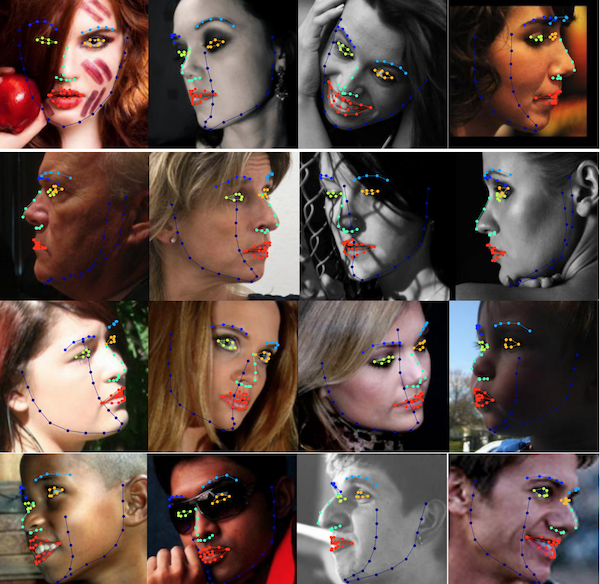

+- [x] [SimpleRegression](alignment/coordinate_reg)

+

+

+[SDUNets](alignment/heatmap) is a heatmap-based method that was accepted at [BMVC](http://bmvc2018.org/contents/papers/0051.pdf).
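A heatmap-based aligner predicts one heatmap per landmark and reads each landmark position off that heatmap's peak. The following is a minimal sketch of plain argmax decoding. It assumes a `(K, H, W)` heatmap array and a square input crop. The helper name `decode_heatmaps` is hypothetical, and the actual post-processing in `alignment/heatmap` (for example any sub-pixel refinement) may differ.

```python
import numpy as np


def decode_heatmaps(heatmaps: np.ndarray, input_size: int) -> np.ndarray:
    """Return (K, 2) landmark (x, y) coordinates from K heatmaps.

    Hypothetical helper: takes the argmax of each heatmap and rescales the
    grid position to a square input crop of side `input_size` pixels.
    """
    k, h, w = heatmaps.shape
    # Index of the peak response in each flattened heatmap.
    flat_idx = heatmaps.reshape(k, -1).argmax(axis=1)
    ys, xs = np.unravel_index(flat_idx, (h, w))
    coords = np.stack([xs, ys], axis=1).astype(np.float32)
    # Scale heatmap grid units to input-image pixels.
    coords *= np.array([input_size / w, input_size / h], dtype=np.float32)
    return coords


if __name__ == "__main__":
    hm = np.zeros((2, 64, 64), dtype=np.float32)
    hm[0, 10, 20] = 1.0  # peak at x=20, y=10 on the 64x64 grid
    hm[1, 63, 0] = 1.0   # peak at x=0, y=63
    print(decode_heatmaps(hm, 128))  # rows (40, 20) and (0, 126)
```

Argmax decoding is quantised to the heatmap grid, which is why heatmap methods often add a refinement step on top.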

+

+[SimpleRegression](alignment/coordinate_reg) provides very lightweight facial landmark models with fast coordinate regression. These models take a loosely cropped face image as input and output the landmark coordinates directly.
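The regression models output coordinates directly, so post-processing reduces to rescaling them and undoing the crop. The following is a minimal sketch under two assumptions. First, the network emits a flat vector of (x, y) pairs normalized to [-1, 1] over a square crop. Second, the crop was produced by a 2x3 affine matrix `M`, as used with `cv2.warpAffine`. Both assumptions and the helper names are illustrative and are not taken from `coordinate_reg/image_infer.py`.

```python
import numpy as np


def denormalize_landmarks(pred, input_size: int) -> np.ndarray:
    """Map a flat [-1, 1] prediction to (N, 2) pixel coordinates in the crop."""
    pts = np.asarray(pred, dtype=np.float32).reshape(-1, 2)
    return (pts + 1.0) * (input_size / 2.0)


def crop_to_image(pts: np.ndarray, M) -> np.ndarray:
    """Map crop coordinates back to the original image via the inverse of M."""
    A = np.vstack([np.asarray(M, dtype=np.float64), [0.0, 0.0, 1.0]])  # 3x3 homogeneous
    inv = np.linalg.inv(A)
    homo = np.hstack([pts, np.ones((pts.shape[0], 1))])  # (N, 3)
    return (homo @ inv.T)[:, :2]


if __name__ == "__main__":
    crop_pts = denormalize_landmarks([-1, -1, 1, 1, 0, 0], 192)  # 192 is an assumed size
    M = [[2.0, 0.0, -10.0], [0.0, 2.0, -20.0]]  # hypothetical crop transform
    print(crop_to_image(crop_pts, M))
```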

## Citation

@@ -312,11 +138,34 @@ For single cropped face image(112x112), total inference time is only 17ms on our

If you find *InsightFace* useful in your research, please consider citing the following related papers:

```

-@inproceedings{deng2019retinaface,

-title={RetinaFace: Single-stage Dense Face Localisation in the Wild},

-author={Deng, Jiankang and Guo, Jia and Yuxiang, Zhou and Jinke Yu and Irene Kotsia and Zafeiriou, Stefanos},

-booktitle={arxiv},

-year={2019}

+

+@article{guo2021sample,

+ title={Sample and Computation Redistribution for Efficient Face Detection},

+ author={Guo, Jia and Deng, Jiankang and Lattas, Alexandros and Zafeiriou, Stefanos},

+ journal={arXiv preprint arXiv:2105.04714},

+ year={2021}

+}

+

+@inproceedings{an2020partical_fc,

+ title={Partial FC: Training 10 Million Identities on a Single Machine},

+ author={An, Xiang and Zhu, Xuhan and Xiao, Yang and Wu, Lan and Zhang, Ming and Gao, Yuan and Qin, Bin and

+ Zhang, Debing and Fu, Ying},

+ booktitle={Arxiv 2010.05222},

+ year={2020}

+}

+

+@inproceedings{deng2020subcenter,

+ title={Sub-center ArcFace: Boosting Face Recognition by Large-scale Noisy Web Faces},

+ author={Deng, Jiankang and Guo, Jia and Liu, Tongliang and Gong, Mingming and Zafeiriou, Stefanos},

+ booktitle={Proceedings of the European Conference on Computer Vision},

+ year={2020}

+}

+

+@inproceedings{Deng2020CVPR,

+title = {RetinaFace: Single-Shot Multi-Level Face Localisation in the Wild},

+author = {Deng, Jiankang and Guo, Jia and Ververas, Evangelos and Kotsia, Irene and Zafeiriou, Stefanos},

+booktitle = {CVPR},

+year = {2020}

}

@inproceedings{guo2018stacked,

diff --git a/alignment/README.md b/alignment/README.md

index 9656d13..7b3706b 100644

--- a/alignment/README.md

+++ b/alignment/README.md

@@ -1,4 +1,42 @@

-You can now find heatmap based approaches under ``heatmapReg`` directory.

+## Face Alignment

+

+

+

+

+

+

+

+## Introduction

+

+These are the face alignment methods of [InsightFace](https://insightface.ai).

+

+

+

+

+

+

+

+### Datasets

+

+ Please refer to the [datasets](_datasets_) page for details of the face alignment datasets used for training and evaluation.

+

+### Evaluation

+

+ Please refer to the [evaluation](_evaluation_) page for details of face alignment evaluation.
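Face alignment results are commonly reported as the normalized mean error (NME). NME is the mean Euclidean distance between predicted and ground-truth landmarks, divided by a normalizing length. The following is a minimal sketch assuming inter-ocular normalization, with the eye-landmark indices supplied by the caller. The normalizing term varies between benchmarks (inter-ocular, inter-pupil, face-box size), so this is not necessarily what `heatmap/test_rec_nme.py` computes.

```python
import numpy as np


def nme(pred, gt, left_eye_idx: int, right_eye_idx: int) -> float:
    """Mean per-landmark L2 error divided by the ground-truth inter-ocular distance."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    norm = np.linalg.norm(gt[left_eye_idx] - gt[right_eye_idx])
    per_point = np.linalg.norm(pred - gt, axis=1)  # Euclidean error per landmark
    return float(per_point.mean() / norm)
```

The result is usually averaged over a test set and often quoted as a percentage.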

+

+

+## Methods

+

+

+Supported methods:

+

+- [x] [SDUNets (BMVC'2018)](heatmap)

+- [x] [SimpleRegression](coordinate_reg)

+

+

+

+## Contributing

+

+We appreciate all contributions to improve the face alignment model zoo of InsightFace.

-You can now find coordinate regression approaches under ``coordinateReg`` directory.

diff --git a/alignment/coordinateReg/README.md b/alignment/coordinate_reg/README.md

similarity index 100%

rename from alignment/coordinateReg/README.md

rename to alignment/coordinate_reg/README.md

diff --git a/alignment/coordinateReg/image_infer.py b/alignment/coordinate_reg/image_infer.py

similarity index 100%

rename from alignment/coordinateReg/image_infer.py

rename to alignment/coordinate_reg/image_infer.py

diff --git a/alignment/heatmapReg/README.md b/alignment/heatmap/README.md

similarity index 100%

rename from alignment/heatmapReg/README.md

rename to alignment/heatmap/README.md

diff --git a/alignment/heatmapReg/data.py b/alignment/heatmap/data.py

similarity index 100%

rename from alignment/heatmapReg/data.py

rename to alignment/heatmap/data.py

diff --git a/alignment/heatmapReg/img_helper.py b/alignment/heatmap/img_helper.py

similarity index 100%

rename from alignment/heatmapReg/img_helper.py

rename to alignment/heatmap/img_helper.py

diff --git a/alignment/heatmapReg/metric.py b/alignment/heatmap/metric.py

similarity index 100%

rename from alignment/heatmapReg/metric.py

rename to alignment/heatmap/metric.py

diff --git a/alignment/heatmapReg/optimizer.py b/alignment/heatmap/optimizer.py

similarity index 100%

rename from alignment/heatmapReg/optimizer.py

rename to alignment/heatmap/optimizer.py

diff --git a/alignment/heatmapReg/sample_config.py b/alignment/heatmap/sample_config.py

similarity index 100%

rename from alignment/heatmapReg/sample_config.py

rename to alignment/heatmap/sample_config.py

diff --git a/alignment/heatmapReg/symbol/sym_heatmap.py b/alignment/heatmap/symbol/sym_heatmap.py

similarity index 100%

rename from alignment/heatmapReg/symbol/sym_heatmap.py

rename to alignment/heatmap/symbol/sym_heatmap.py

diff --git a/alignment/heatmapReg/test.py b/alignment/heatmap/test.py

similarity index 100%

rename from alignment/heatmapReg/test.py

rename to alignment/heatmap/test.py

diff --git a/alignment/heatmapReg/test_rec_nme.py b/alignment/heatmap/test_rec_nme.py

similarity index 100%

rename from alignment/heatmapReg/test_rec_nme.py

rename to alignment/heatmap/test_rec_nme.py

diff --git a/alignment/heatmapReg/train.py b/alignment/heatmap/train.py

similarity index 100%

rename from alignment/heatmapReg/train.py

rename to alignment/heatmap/train.py

diff --git a/attribute/README.md b/attribute/README.md

new file mode 100644

index 0000000..1a8379c

--- /dev/null

+++ b/attribute/README.md

@@ -0,0 +1,41 @@

+## Face Attribute

+

+

+

+

+

+

+

+## Introduction

+

+These are the face attribute methods of [InsightFace](https://insightface.ai).

+

+

+

+

+

+

+

+### Datasets

+

+ Please refer to the [datasets](_datasets_) page for details of the face attribute datasets used for training and evaluation.

+

+### Evaluation

+

+ Please refer to the [evaluation](_evaluation_) page for details of face attribute evaluation.

+

+

+## Methods

+

+

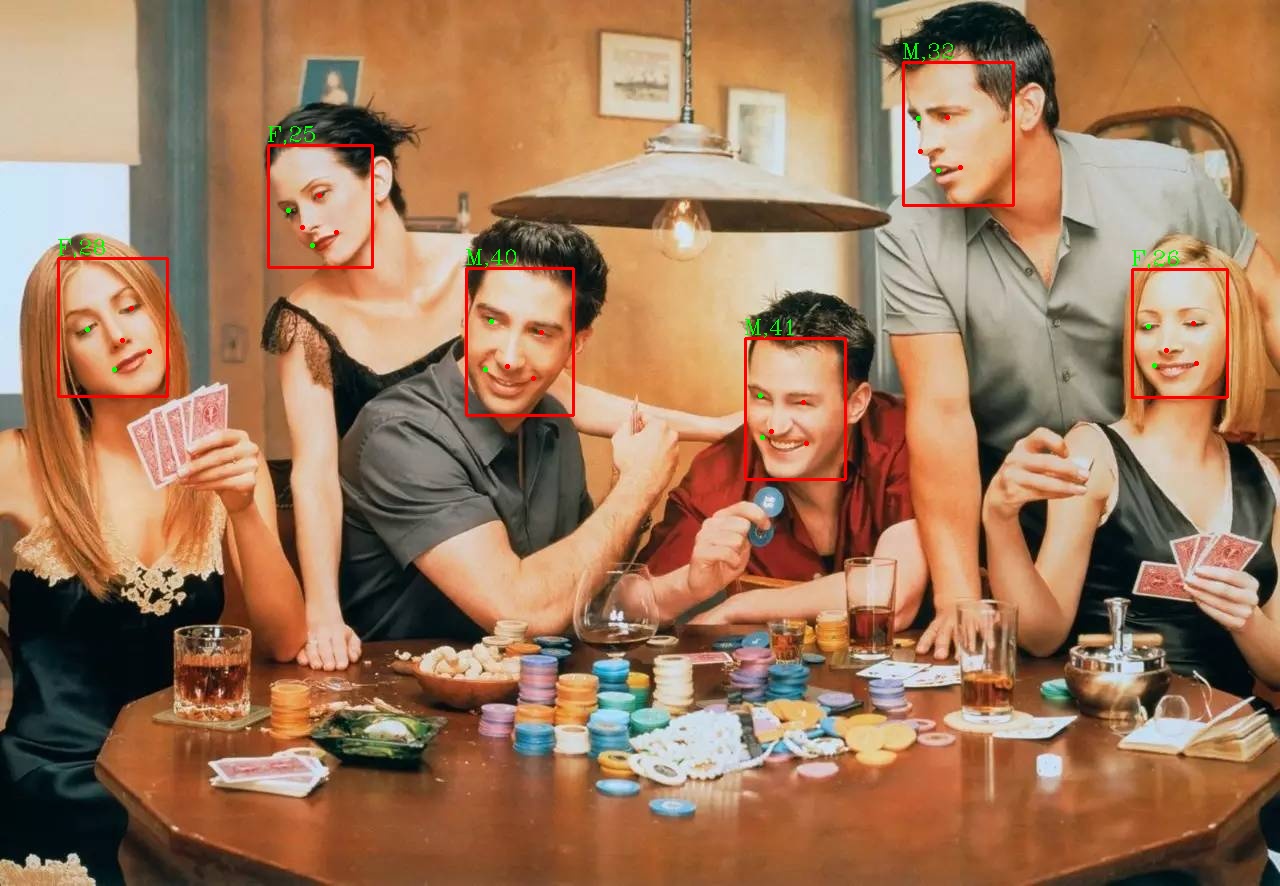

+Supported methods:

+

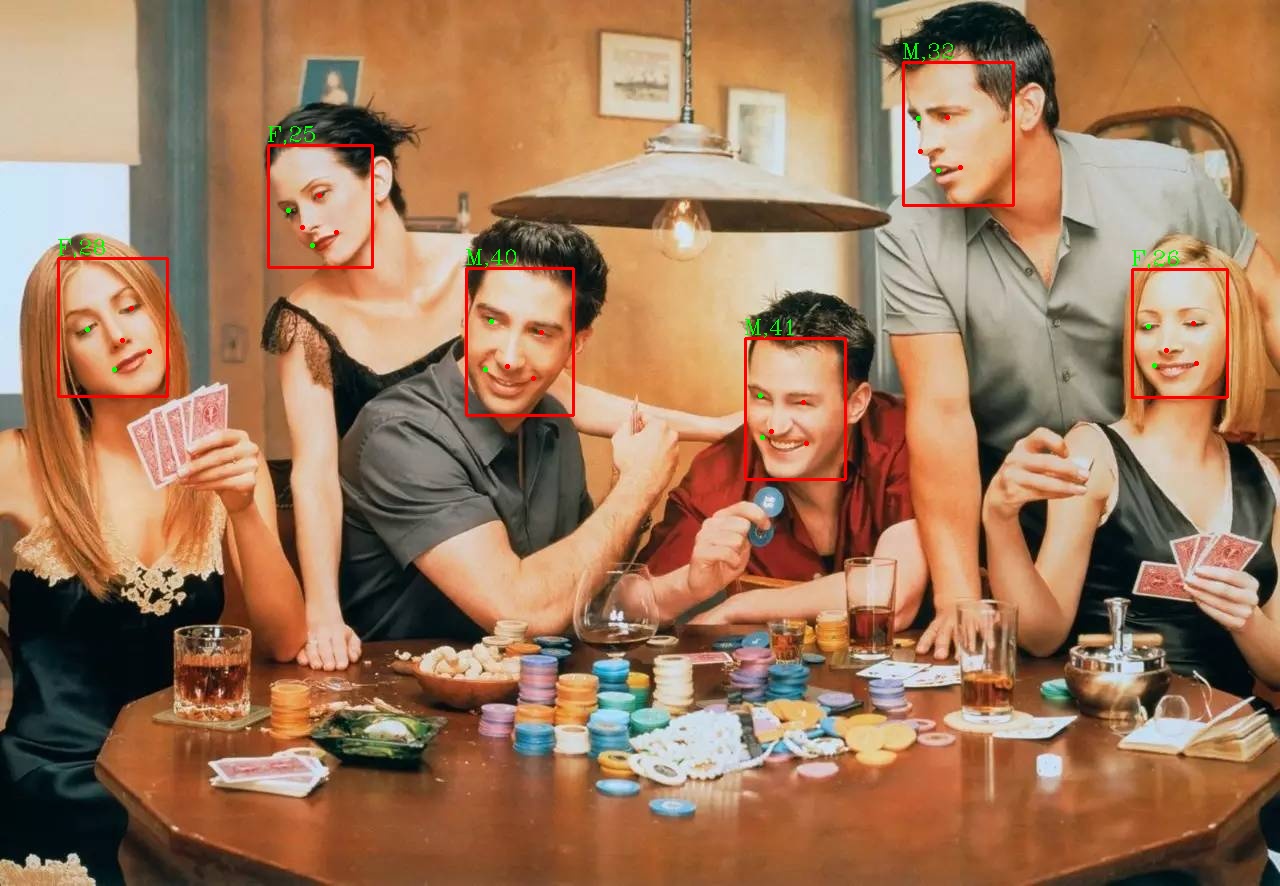

+- [x] [Gender_Age](gender_age)

+

+

+

+## Contributing

+

+We appreciate all contributions to improve the face attribute model zoo of InsightFace.

+

+

diff --git a/deploy/test.py b/attribute/gender_age/test.py

similarity index 53%

rename from deploy/test.py

rename to attribute/gender_age/test.py

index f5ecdf2..a92b216 100644

--- a/deploy/test.py

+++ b/attribute/gender_age/test.py

@@ -4,25 +4,21 @@ import sys

import numpy as np

import insightface

from insightface.app import FaceAnalysis

+from insightface.data import get_image as ins_get_image

-assert insightface.__version__>='0.2'

-parser = argparse.ArgumentParser(description='insightface test')

+parser = argparse.ArgumentParser(description='insightface gender-age test')

# general

parser.add_argument('--ctx', default=0, type=int, help='ctx id, <0 means using cpu')

args = parser.parse_args()

-app = FaceAnalysis(name='antelope')

+app = FaceAnalysis(allowed_modules=['detection', 'genderage'])

app.prepare(ctx_id=args.ctx, det_size=(640,640))

-img = cv2.imread('../sample-images/t1.jpg')

+img = ins_get_image('t1')

faces = app.get(img)

assert len(faces)==6

-rimg = app.draw_on(img, faces)

-cv2.imwrite("./t1_output.jpg", rimg)

-print(len(faces))

for face in faces:

    print(face.bbox)

-    print(face.kps)

-    print(face.embedding.shape)

+    print(face.sex, face.age)

diff --git a/challenges/README.md b/challenges/README.md

new file mode 100644

index 0000000..b2422cc

--- /dev/null

+++ b/challenges/README.md

@@ -0,0 +1,31 @@

+## Challenges

+

+

+

+

+

+

+

+## Introduction

+

+These are the challenges held by [InsightFace](https://insightface.ai).

+

+

+

+

+

+

+

+

+## List

+

+

+Available challenges:

+

+- [LFR19 (ICCVW'2019)](iccv19-lfr)

+- [MFR21 (ICCVW'2021)](iccv21-mfr)

+- [IFRT](ifrt)

+

+

+

+

diff --git a/challenges/iccv19-lfr/README.md b/challenges/iccv19-lfr/README.md

index f9ae0e0..12aa535 100644

--- a/challenges/iccv19-lfr/README.md

+++ b/challenges/iccv19-lfr/README.md

@@ -31,7 +31,7 @@ insightface.challenge@gmail.com

*For Chinese:*

-

+

*For English:*

diff --git a/deploy/README.md b/deploy/README.md

deleted file mode 100644

index e65643d..0000000

--- a/deploy/README.md

+++ /dev/null

@@ -1,8 +0,0 @@

-InsightFace deployment README

----

-

-For insightface pip-package <= 0.1.5, we use MXNet as inference backend, please download all models from [onedrive](https://1drv.ms/u/s!AswpsDO2toNKrUy0VktHTWgIQ0bn?e=UEF7C4), and put them all under `~/.insightface/models/` directory.

-

-Starting from insightface>=0.2, we use onnxruntime as inference backend, please download our **antelope** model release from [onedrive](https://1drv.ms/u/s!AswpsDO2toNKrU0ydGgDkrHPdJ3m?e=iVgZox), and put it under `~/.insightface/models/`, so there're onnx models at `~/.insightface/models/antelope/*.onnx`.

-

-The **antelope** model release contains `ResNet100@Glint360K recognition model` and `SCRFD-10GF detection model`. Please check `test.py` for detail.

diff --git a/deploy/convert_onnx.py b/deploy/convert_onnx.py

deleted file mode 100644

index 3ed583d..0000000

--- a/deploy/convert_onnx.py

+++ /dev/null

@@ -1,40 +0,0 @@

-import sys

-import os

-import argparse

-import onnx

-import mxnet as mx

-

-print('mxnet version:', mx.__version__)

-print('onnx version:', onnx.__version__)

-#make sure to install onnx-1.2.1

-#pip uninstall onnx

-#pip install onnx==1.2.1

-assert onnx.__version__ == '1.2.1'

-import numpy as np

-from mxnet.contrib import onnx as onnx_mxnet

-

-parser = argparse.ArgumentParser(

- description='convert insightface models to onnx')

-# general

-parser.add_argument('--prefix',

- default='./r100-arcface/model',

- help='prefix to load model.')

-parser.add_argument('--epoch',

- default=0,

- type=int,

- help='epoch number to load model.')

-parser.add_argument('--input-shape', default='3,112,112', help='input shape.')

-parser.add_argument('--output-onnx',

- default='./r100.onnx',

- help='path to write onnx model.')

-args = parser.parse_args()

-input_shape = (1, ) + tuple([int(x) for x in args.input_shape.split(',')])

-print('input-shape:', input_shape)

-

-sym_file = "%s-symbol.json" % args.prefix

-params_file = "%s-%04d.params" % (args.prefix, args.epoch)

-assert os.path.exists(sym_file)

-assert os.path.exists(params_file)

-converted_model_path = onnx_mxnet.export_model(sym_file, params_file,

- [input_shape], np.float32,

- args.output_onnx)

diff --git a/deploy/face_model.py b/deploy/face_model.py

deleted file mode 100644

index 2e4a361..0000000

--- a/deploy/face_model.py

+++ /dev/null

@@ -1,67 +0,0 @@

-from __future__ import absolute_import

-from __future__ import division

-from __future__ import print_function

-

-import sys

-import os

-import argparse

-import numpy as np

-import mxnet as mx

-import cv2

-import insightface

-from insightface.utils import face_align

-

-

-def do_flip(data):

- for idx in range(data.shape[0]):

- data[idx, :, :] = np.fliplr(data[idx, :, :])

-

-

-def get_model(ctx, image_size, prefix, epoch, layer):

- print('loading', prefix, epoch)

- sym, arg_params, aux_params = mx.model.load_checkpoint(prefix, epoch)

- all_layers = sym.get_internals()

- sym = all_layers[layer + '_output']

- model = mx.mod.Module(symbol=sym, context=ctx, label_names=None)

- #model.bind(data_shapes=[('data', (args.batch_size, 3, image_size[0], image_size[1]))], label_shapes=[('softmax_label', (args.batch_size,))])

- model.bind(data_shapes=[('data', (1, 3, image_size[0], image_size[1]))])

- model.set_params(arg_params, aux_params)

- return model

-

-

-class FaceModel:

- def __init__(self, ctx_id, model_prefix, model_epoch, use_large_detector=False):

- if use_large_detector:

- self.detector = insightface.model_zoo.get_model('retinaface_r50_v1')

- else:

- self.detector = insightface.model_zoo.get_model('retinaface_mnet025_v2')

- self.detector.prepare(ctx_id=ctx_id)

- if ctx_id>=0:

- ctx = mx.gpu(ctx_id)

- else:

- ctx = mx.cpu()

- image_size = (112,112)

- self.model = get_model(ctx, image_size, model_prefix, model_epoch, 'fc1')

- self.image_size = image_size

-

- def get_input(self, face_img):

- bbox, pts5 = self.detector.detect(face_img, threshold=0.8)

- if bbox.shape[0]==0:

- return None

- bbox = bbox[0, 0:4]

- pts5 = pts5[0, :]

- nimg = face_align.norm_crop(face_img, pts5)

- return nimg

-

- def get_feature(self, aligned):

- a = cv2.cvtColor(aligned, cv2.COLOR_BGR2RGB)

- a = np.transpose(a, (2, 0, 1))

- input_blob = np.expand_dims(a, axis=0)

- data = mx.nd.array(input_blob)

- db = mx.io.DataBatch(data=(data, ))

- self.model.forward(db, is_train=False)

- emb = self.model.get_outputs()[0].asnumpy()[0]

- norm = np.sqrt(np.sum(emb*emb)+0.00001)

- emb /= norm

- return emb

-
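The removed `FaceModel.get_feature` prepares an aligned crop and normalizes the result. It converts the aligned BGR crop to a 1x3xHxW RGB batch, then L2-normalizes the `fc1` embedding, so two embeddings can be compared with a plain dot product (cosine similarity). Below is a NumPy-only sketch of those steps with the MXNet forward pass left out. The helper names are hypothetical. Reversing the channel axis is used as the equivalent of `cv2.cvtColor(..., cv2.COLOR_BGR2RGB)`.

```python
import numpy as np


def to_rgb_nchw(bgr: np.ndarray) -> np.ndarray:
    """HxWx3 BGR image -> 1x3xHxW RGB float32 batch, mirroring get_feature."""
    rgb = bgr[:, :, ::-1]               # BGR -> RGB by reversing the channel axis
    chw = np.transpose(rgb, (2, 0, 1))  # HWC -> CHW
    return np.expand_dims(chw, axis=0).astype(np.float32)


def l2_normalize(emb: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Divide by the L2 norm; eps (same constant as the original) avoids division by zero."""
    return emb / np.sqrt(np.sum(emb * emb) + eps)


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Dot product of two L2-normalized embeddings."""
    return float(np.dot(l2_normalize(a), l2_normalize(b)))
```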

diff --git a/deploy/model_slim.py b/deploy/model_slim.py

deleted file mode 100644

index 421b0cd..0000000

--- a/deploy/model_slim.py

+++ /dev/null

@@ -1,32 +0,0 @@

-from __future__ import absolute_import

-from __future__ import division

-from __future__ import print_function

-

-import sys

-import os

-import argparse

-import numpy as np

-import mxnet as mx

-

-parser = argparse.ArgumentParser(description='face model slim')

-# general

-parser.add_argument('--model',

- default='../models/model-r34-amf/model,60',

- help='path to load model.')

-args = parser.parse_args()

-

-_vec = args.model.split(',')

-assert len(_vec) == 2

-prefix = _vec[0]

-epoch = int(_vec[1])

-print('loading', prefix, epoch)

-sym, arg_params, aux_params = mx.model.load_checkpoint(prefix, epoch)

-all_layers = sym.get_internals()

-sym = all_layers['fc1_output']

-dellist = []

-for k, v in arg_params.iteritems():

- if k.startswith('fc7'):

- dellist.append(k)

-for d in dellist:

- del arg_params[d]

-mx.model.save_checkpoint(prefix + "s", 0, sym, arg_params, aux_params)
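The removed `model_slim.py` drops every parameter whose name starts with `fc7`, the training-time classification layer. It also truncates the graph at `fc1_output`, so the saved checkpoint holds only what is needed to compute embeddings. The script iterates with `arg_params.iteritems()`, which exists only in Python 2. Below is a Python 3 sketch of the filtering step that uses a dict comprehension instead of collecting keys and deleting them afterwards. `strip_params` is a hypothetical name, and the MXNet load and save calls are omitted.

```python
from typing import Dict, TypeVar

V = TypeVar("V")


def strip_params(params: Dict[str, V], prefix: str = "fc7") -> Dict[str, V]:
    """Return a copy of `params` without entries whose name starts with `prefix`."""
    # Building a new dict avoids mutating `params` while iterating over it.
    return {name: value for name, value in params.items() if not name.startswith(prefix)}
```

With MXNet, the filtered dict would take the place of `arg_params` in the `mx.model.save_checkpoint` call, as in the original script.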

diff --git a/deploy/mtcnn-model/det1-0001.params b/deploy/mtcnn-model/det1-0001.params

deleted file mode 100644

index e4b04aa..0000000

Binary files a/deploy/mtcnn-model/det1-0001.params and /dev/null differ

diff --git a/deploy/mtcnn-model/det1-symbol.json b/deploy/mtcnn-model/det1-symbol.json

deleted file mode 100644

index bd9b772..0000000

--- a/deploy/mtcnn-model/det1-symbol.json

+++ /dev/null

@@ -1,266 +0,0 @@

-{

- "nodes": [

- {

- "op": "null",

- "param": {},

- "name": "data",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "10",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1",

- "inputs": [[0, 0], [1, 0], [2, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1",

- "inputs": [[3, 0], [4, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(2,2)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1",

- "inputs": [[5, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "16",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2",

- "inputs": [[6, 0], [7, 0], [8, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2",

- "inputs": [[9, 0], [10, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "32",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3",

- "inputs": [[11, 0], [12, 0], [13, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3",

- "inputs": [[14, 0], [15, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(1,1)",

- "no_bias": "False",

- "num_filter": "4",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv4_2",

- "inputs": [[16, 0], [17, 0], [18, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(1,1)",

- "no_bias": "False",

- "num_filter": "2",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv4_1",

- "inputs": [[16, 0], [20, 0], [21, 0]],

- "backward_source_id": -1

- },

- {

- "op": "SoftmaxActivation",

- "param": {"mode": "channel"},

- "name": "prob1",

- "inputs": [[22, 0]],

- "backward_source_id": -1

- }

- ],

- "arg_nodes": [

- 0,

- 1,

- 2,

- 4,

- 7,

- 8,

- 10,

- 12,

- 13,

- 15,

- 17,

- 18,

- 20,

- 21

- ],

- "heads": [[19, 0], [23, 0]]

-}

\ No newline at end of file

diff --git a/deploy/mtcnn-model/det1.caffemodel b/deploy/mtcnn-model/det1.caffemodel

deleted file mode 100644

index 79e93b4..0000000

Binary files a/deploy/mtcnn-model/det1.caffemodel and /dev/null differ

diff --git a/deploy/mtcnn-model/det1.prototxt b/deploy/mtcnn-model/det1.prototxt

deleted file mode 100644

index c5c1657..0000000

--- a/deploy/mtcnn-model/det1.prototxt

+++ /dev/null

@@ -1,177 +0,0 @@

-name: "PNet"

-input: "data"

-input_dim: 1

-input_dim: 3

-input_dim: 12

-input_dim: 12

-

-layer {

- name: "conv1"

- type: "Convolution"

- bottom: "data"

- top: "conv1"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 0

- }

- convolution_param {

- num_output: 10

- kernel_size: 3

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "PReLU1"

- type: "PReLU"

- bottom: "conv1"

- top: "conv1"

-}

-layer {

- name: "pool1"

- type: "Pooling"

- bottom: "conv1"

- top: "pool1"

- pooling_param {

- pool: MAX

- kernel_size: 2

- stride: 2

- }

-}

-

-layer {

- name: "conv2"

- type: "Convolution"

- bottom: "pool1"

- top: "conv2"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 0

- }

- convolution_param {

- num_output: 16

- kernel_size: 3

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "PReLU2"

- type: "PReLU"

- bottom: "conv2"

- top: "conv2"

-}

-

-layer {

- name: "conv3"

- type: "Convolution"

- bottom: "conv2"

- top: "conv3"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 0

- }

- convolution_param {

- num_output: 32

- kernel_size: 3

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "PReLU3"

- type: "PReLU"

- bottom: "conv3"

- top: "conv3"

-}

-

-

-layer {

- name: "conv4-1"

- type: "Convolution"

- bottom: "conv3"

- top: "conv4-1"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 0

- }

- convolution_param {

- num_output: 2

- kernel_size: 1

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-

-layer {

- name: "conv4-2"

- type: "Convolution"

- bottom: "conv3"

- top: "conv4-2"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 0

- }

- convolution_param {

- num_output: 4

- kernel_size: 1

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prob1"

- type: "Softmax"

- bottom: "conv4-1"

- top: "prob1"

-}
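The deleted `det1.prototxt` defines the MTCNN P-Net for a 12x12 input. Working through the layer shapes shows that a 12x12 window collapses to a single 1x1 output cell. That cell carries two face/non-face scores from `conv4-1` and four box offsets from `conv4-2`. The following is a small sketch of the arithmetic. It assumes zero padding, as in the prototxt, and ceil rounding for pooling, which is Caffe's behavior and also what MXNet's `pooling_convention: full` in `det1-symbol.json` does. The helper names are hypothetical.

```python
import math


def conv_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Spatial output size of a convolution (floor rounding)."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size: int, kernel: int, stride: int, pad: int = 0) -> int:
    """Spatial output size of max pooling with ceil rounding."""
    return int(math.ceil((size + 2 * pad - kernel) / stride)) + 1


def pnet_output_size(size: int = 12) -> int:
    s = conv_out(size, 3)   # conv1: 3x3, 10 filters
    s = pool_out(s, 2, 2)   # pool1: 2x2, stride 2
    s = conv_out(s, 3)      # conv2: 3x3, 16 filters
    s = conv_out(s, 3)      # conv3: 3x3, 32 filters
    return conv_out(s, 1)   # conv4-1 / conv4-2: 1x1 heads
```

P-Net has no fully connected layer, so a larger input simply yields a grid of outputs. For example, a 24x24 input gives a 7x7 map.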

diff --git a/deploy/mtcnn-model/det2-0001.params b/deploy/mtcnn-model/det2-0001.params

deleted file mode 100644

index a14a478..0000000

Binary files a/deploy/mtcnn-model/det2-0001.params and /dev/null differ

diff --git a/deploy/mtcnn-model/det2-symbol.json b/deploy/mtcnn-model/det2-symbol.json

deleted file mode 100644

index a13246a..0000000

--- a/deploy/mtcnn-model/det2-symbol.json

+++ /dev/null

@@ -1,324 +0,0 @@

-{

- "nodes": [

- {

- "op": "null",

- "param": {},

- "name": "data",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "28",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1",

- "inputs": [[0, 0], [1, 0], [2, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1",

- "inputs": [[3, 0], [4, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1",

- "inputs": [[5, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "48",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2",

- "inputs": [[6, 0], [7, 0], [8, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2",

- "inputs": [[9, 0], [10, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool2",

- "inputs": [[11, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(2,2)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3",

- "inputs": [[12, 0], [13, 0], [14, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3",

- "inputs": [[15, 0], [16, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "FullyConnected",

- "param": {

- "no_bias": "False",

- "num_hidden": "128"

- },

- "name": "conv4",

- "inputs": [[17, 0], [18, 0], [19, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu4_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu4",

- "inputs": [[20, 0], [21, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv5_2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv5_2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "FullyConnected",

- "param": {

- "no_bias": "False",

- "num_hidden": "4"

- },

- "name": "conv5_2",

- "inputs": [[22, 0], [23, 0], [24, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv5_1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv5_1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "FullyConnected",

- "param": {

- "no_bias": "False",

- "num_hidden": "2"

- },

- "name": "conv5_1",

- "inputs": [[22, 0], [26, 0], [27, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prob1_label",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "SoftmaxOutput",

- "param": {

- "grad_scale": "1",

- "ignore_label": "-1",

- "multi_output": "False",

- "normalization": "null",

- "use_ignore": "False"

- },

- "name": "prob1",

- "inputs": [[28, 0], [29, 0]],

- "backward_source_id": -1

- }

- ],

- "arg_nodes": [

- 0,

- 1,

- 2,

- 4,

- 7,

- 8,

- 10,

- 13,

- 14,

- 16,

- 18,

- 19,

- 21,

- 23,

- 24,

- 26,

- 27,

- 29

- ],

- "heads": [[25, 0], [30, 0]]

-}

\ No newline at end of file

diff --git a/deploy/mtcnn-model/det2.caffemodel b/deploy/mtcnn-model/det2.caffemodel

deleted file mode 100644

index a5a540c..0000000

Binary files a/deploy/mtcnn-model/det2.caffemodel and /dev/null differ

diff --git a/deploy/mtcnn-model/det2.prototxt b/deploy/mtcnn-model/det2.prototxt

deleted file mode 100644

index 51093e6..0000000

--- a/deploy/mtcnn-model/det2.prototxt

+++ /dev/null

@@ -1,228 +0,0 @@

-name: "RNet"

-input: "data"

-input_dim: 1

-input_dim: 3

-input_dim: 24

-input_dim: 24

-

-

-##########################

-######################

-layer {

- name: "conv1"

- type: "Convolution"

- bottom: "data"

- top: "conv1"

- param {

- lr_mult: 0

- decay_mult: 0

- }

- param {

- lr_mult: 0

- decay_mult: 0

- }

- convolution_param {

- num_output: 28

- kernel_size: 3

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prelu1"

- type: "PReLU"

- bottom: "conv1"

- top: "conv1"

- propagate_down: true

-}

-layer {

- name: "pool1"

- type: "Pooling"

- bottom: "conv1"

- top: "pool1"

- pooling_param {

- pool: MAX

- kernel_size: 3

- stride: 2

- }

-}

-

-layer {

- name: "conv2"

- type: "Convolution"

- bottom: "pool1"

- top: "conv2"

- param {

- lr_mult: 0

- decay_mult: 0

- }

- param {

- lr_mult: 0

- decay_mult: 0

- }

- convolution_param {

- num_output: 48

- kernel_size: 3

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prelu2"

- type: "PReLU"

- bottom: "conv2"

- top: "conv2"

- propagate_down: true

-}

-layer {

- name: "pool2"

- type: "Pooling"

- bottom: "conv2"

- top: "pool2"

- pooling_param {

- pool: MAX

- kernel_size: 3

- stride: 2

- }

-}

-####################################

-

-##################################

-layer {

- name: "conv3"

- type: "Convolution"

- bottom: "pool2"

- top: "conv3"

- param {

- lr_mult: 0

- decay_mult: 0

- }

- param {

- lr_mult: 0

- decay_mult: 0

- }

- convolution_param {

- num_output: 64

- kernel_size: 2

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prelu3"

- type: "PReLU"

- bottom: "conv3"

- top: "conv3"

- propagate_down: true

-}

-###############################

-

-###############################

-

-layer {

- name: "conv4"

- type: "InnerProduct"

- bottom: "conv3"

- top: "conv4"

- param {

- lr_mult: 0

- decay_mult: 0

- }

- param {

- lr_mult: 0

- decay_mult: 0

- }

- inner_product_param {

- num_output: 128

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prelu4"

- type: "PReLU"

- bottom: "conv4"

- top: "conv4"

-}

-

-layer {

- name: "conv5-1"

- type: "InnerProduct"

- bottom: "conv4"

- top: "conv5-1"

- param {

- lr_mult: 0

- decay_mult: 0

- }

- param {

- lr_mult: 0

- decay_mult: 0

- }

- inner_product_param {

- num_output: 2

- #kernel_size: 1

- #stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "conv5-2"

- type: "InnerProduct"

- bottom: "conv4"

- top: "conv5-2"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- inner_product_param {

- num_output: 4

- #kernel_size: 1

- #stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prob1"

- type: "Softmax"

- bottom: "conv5-1"

- top: "prob1"

-}

\ No newline at end of file
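The `PReLU` layers in the prototxt above, and the `LeakyReLU` nodes with `act_type: "prelu"` in the MXNet symbol files, compute a channel-wise parametric ReLU. Positive inputs pass through unchanged, and negative inputs are scaled by a learned slope for their channel. Setting `top` equal to `bottom` tells Caffe to run the layer in place. In MXNet the learned slopes are stored in the `*_gamma` variables. The `slope`, `lower_bound` and `upper_bound` fields in the JSON belong to other `act_type` values (`leaky`, `rrelu`) and have no effect for `prelu`.

The pure-Python sketch below shows the arithmetic. The function name `prelu` and the list-of-channels layout are illustrative assumptions and do not come from this repository.

```python
from typing import List, Sequence


def prelu(channels: Sequence[Sequence[float]], gamma: Sequence[float]) -> List[List[float]]:
    """Channel-wise PReLU: y = x for x > 0, otherwise gamma[c] * x.

    `channels` holds one flat list of activations per channel (a simplified
    stand-in for one sample of an N x C x H x W blob); `gamma` holds one
    learned slope per channel, as in Caffe's PReLU with channel_shared=false.
    """
    if len(channels) != len(gamma):
        raise ValueError("PReLU needs exactly one slope per channel")
    return [[x if x > 0 else a * x for x in values] for values, a in zip(channels, gamma)]


if __name__ == "__main__":
    # Two channels with different learned slopes.
    print(prelu([[1.0, -2.0], [-4.0, 3.0]], [0.25, 0.5]))
```

Running it prints `[[1.0, -0.5], [-2.0, 3.0]]`. Each negative value is multiplied by its own channel's slope.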

diff --git a/deploy/mtcnn-model/det3-0001.params b/deploy/mtcnn-model/det3-0001.params

deleted file mode 100644

index cae898b..0000000

Binary files a/deploy/mtcnn-model/det3-0001.params and /dev/null differ

diff --git a/deploy/mtcnn-model/det3-symbol.json b/deploy/mtcnn-model/det3-symbol.json

deleted file mode 100644

index 00061ed..0000000

--- a/deploy/mtcnn-model/det3-symbol.json

+++ /dev/null

@@ -1,418 +0,0 @@

-{

- "nodes": [

- {

- "op": "null",

- "param": {},

- "name": "data",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "32",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1",

- "inputs": [[0, 0], [1, 0], [2, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1",

- "inputs": [[3, 0], [4, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1",

- "inputs": [[5, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2",

- "inputs": [[6, 0], [7, 0], [8, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2",

- "inputs": [[9, 0], [10, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool2",

- "inputs": [[11, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3",

- "inputs": [[12, 0], [13, 0], [14, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3",

- "inputs": [[15, 0], [16, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(2,2)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool3",

- "inputs": [[17, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv4_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(2,2)",

- "no_bias": "False",

- "num_filter": "128",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv4",

- "inputs": [[18, 0], [19, 0], [20, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu4_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu4",

- "inputs": [[21, 0], [22, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv5_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv5_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "FullyConnected",

- "param": {

- "no_bias": "False",

- "num_hidden": "256"

- },

- "name": "conv5",

- "inputs": [[23, 0], [24, 0], [25, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu5_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu5",

- "inputs": [[26, 0], [27, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv6_3_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv6_3_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "FullyConnected",

- "param": {

- "no_bias": "False",

- "num_hidden": "10"

- },

- "name": "conv6_3",

- "inputs": [[28, 0], [29, 0], [30, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv6_2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv6_2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "FullyConnected",

- "param": {

- "no_bias": "False",

- "num_hidden": "4"

- },

- "name": "conv6_2",

- "inputs": [[28, 0], [32, 0], [33, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv6_1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv6_1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "FullyConnected",

- "param": {

- "no_bias": "False",

- "num_hidden": "2"

- },

- "name": "conv6_1",

- "inputs": [[28, 0], [35, 0], [36, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prob1_label",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "SoftmaxOutput",

- "param": {

- "grad_scale": "1",

- "ignore_label": "-1",

- "multi_output": "False",

- "normalization": "null",

- "use_ignore": "False"

- },

- "name": "prob1",

- "inputs": [[37, 0], [38, 0]],

- "backward_source_id": -1

- }

- ],

- "arg_nodes": [

- 0,

- 1,

- 2,

- 4,

- 7,

- 8,

- 10,

- 13,

- 14,

- 16,

- 19,

- 20,

- 22,

- 24,

- 25,

- 27,

- 29,

- 30,

- 32,

- 33,

- 35,

- 36,

- 38

- ],

- "heads": [[31, 0], [34, 0], [39, 0]]

-}

\ No newline at end of file
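Every entry of `nodes` in the MXNet symbol file above is one of two kinds. It is either a variable (`"op": "null"`: the data input, a weight, a bias, a PReLU `gamma`, or the softmax label), or an operator whose `inputs` are `[node_index, output_index]` pairs that point back into `nodes`. `arg_nodes` lists the variable indices, and `heads` lists the graph outputs. For ONet, `[[31, 0], [34, 0], [39, 0]]` resolves to three outputs:

- `conv6_3`: 10 landmark values
- `conv6_2`: 4 bounding-box regression values
- `prob1`: the face/non-face softmax

The sketch below resolves those indices to names using only the standard `json` module. The embedded `EXCERPT` is a hand-trimmed, renumbered stand-in for the deleted file, and the helper names are hypothetical.

```python
import json

# Hand-trimmed stand-in for det3-symbol.json: same layout, but node indices
# are renumbered, so they do not match the deleted file.
EXCERPT = """
{
  "nodes": [
    {"op": "null", "param": {}, "name": "data", "inputs": []},
    {"op": "null", "param": {}, "name": "conv6_1_weight", "inputs": []},
    {"op": "null", "param": {}, "name": "conv6_1_bias", "inputs": []},
    {"op": "FullyConnected", "param": {"num_hidden": "2"},
     "name": "conv6_1", "inputs": [[0, 0], [1, 0], [2, 0]]},
    {"op": "null", "param": {}, "name": "conv6_2_weight", "inputs": []},
    {"op": "null", "param": {}, "name": "conv6_2_bias", "inputs": []},
    {"op": "FullyConnected", "param": {"num_hidden": "4"},
     "name": "conv6_2", "inputs": [[0, 0], [4, 0], [5, 0]]},
    {"op": "null", "param": {}, "name": "prob1_label", "inputs": []},
    {"op": "SoftmaxOutput", "param": {}, "name": "prob1",
     "inputs": [[3, 0], [7, 0]]}
  ],
  "arg_nodes": [0, 1, 2, 4, 5, 7],
  "heads": [[6, 0], [8, 0]]
}
"""


def operators(symbol):
    """Return (name, op, input_names) for every non-variable node."""
    nodes = symbol["nodes"]
    rows = []
    for node in nodes:
        if node["op"] == "null":
            continue  # data, weights, biases, gammas and labels are variables
        # entry[0] is the source node index; entry[1] selects which of its outputs
        inputs = [nodes[entry[0]]["name"] for entry in node["inputs"]]
        rows.append((node["name"], node["op"], inputs))
    return rows


def variable_names(symbol):
    """Names of the nodes listed in arg_nodes (inputs and parameters)."""
    return [symbol["nodes"][i]["name"] for i in symbol["arg_nodes"]]


def head_names(symbol):
    """Names of the nodes whose outputs are exposed by the graph."""
    return [symbol["nodes"][entry[0]]["name"] for entry in symbol["heads"]]


if __name__ == "__main__":
    sym = json.loads(EXCERPT)
    for name, op, inputs in operators(sym):
        print(f"{name:8s} {op:15s} <- {', '.join(inputs)}")
    print("outputs:", head_names(sym))
```

To inspect a real symbol file, load it with `json.load` instead of using the excerpt. Newer MXNet releases may write three-element input entries; the code reads only `entry[0]`, so it handles those as well.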

diff --git a/deploy/mtcnn-model/det3.caffemodel b/deploy/mtcnn-model/det3.caffemodel

deleted file mode 100644

index 7b4b8a4..0000000

Binary files a/deploy/mtcnn-model/det3.caffemodel and /dev/null differ

diff --git a/deploy/mtcnn-model/det3.prototxt b/deploy/mtcnn-model/det3.prototxt

deleted file mode 100644

index a192307..0000000

--- a/deploy/mtcnn-model/det3.prototxt

+++ /dev/null

@@ -1,294 +0,0 @@

-name: "ONet"

-input: "data"

-input_dim: 1

-input_dim: 3

-input_dim: 48

-input_dim: 48

-##################################

-layer {

- name: "conv1"

- type: "Convolution"

- bottom: "data"

- top: "conv1"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- convolution_param {

- num_output: 32

- kernel_size: 3

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prelu1"

- type: "PReLU"

- bottom: "conv1"

- top: "conv1"

-}

-layer {

- name: "pool1"

- type: "Pooling"

- bottom: "conv1"

- top: "pool1"

- pooling_param {

- pool: MAX

- kernel_size: 3

- stride: 2

- }

-}

-layer {

- name: "conv2"

- type: "Convolution"

- bottom: "pool1"

- top: "conv2"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- convolution_param {

- num_output: 64

- kernel_size: 3

- stride: 1

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-

-layer {

- name: "prelu2"

- type: "PReLU"

- bottom: "conv2"

- top: "conv2"

-}

-layer {

- name: "pool2"

- type: "Pooling"

- bottom: "conv2"

- top: "pool2"

- pooling_param {

- pool: MAX

- kernel_size: 3

- stride: 2

- }

-}

-

-layer {

- name: "conv3"

- type: "Convolution"

- bottom: "pool2"

- top: "conv3"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- convolution_param {

- num_output: 64

- kernel_size: 3

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prelu3"

- type: "PReLU"

- bottom: "conv3"

- top: "conv3"

-}

-layer {

- name: "pool3"

- type: "Pooling"

- bottom: "conv3"

- top: "pool3"

- pooling_param {

- pool: MAX

- kernel_size: 2

- stride: 2

- }

-}

-layer {

- name: "conv4"

- type: "Convolution"

- bottom: "pool3"

- top: "conv4"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- convolution_param {

- num_output: 128

- kernel_size: 2

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prelu4"

- type: "PReLU"

- bottom: "conv4"

- top: "conv4"

-}

-

-

-layer {

- name: "conv5"

- type: "InnerProduct"

- bottom: "conv4"

- top: "conv5"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- inner_product_param {

- #kernel_size: 3

- num_output: 256

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-

-layer {

- name: "drop5"

- type: "Dropout"

- bottom: "conv5"

- top: "conv5"

- dropout_param {

- dropout_ratio: 0.25

- }

-}

-layer {

- name: "prelu5"

- type: "PReLU"

- bottom: "conv5"

- top: "conv5"

-}

-

-

-layer {

- name: "conv6-1"

- type: "InnerProduct"

- bottom: "conv5"

- top: "conv6-1"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- inner_product_param {

- #kernel_size: 1

- num_output: 2

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "conv6-2"

- type: "InnerProduct"

- bottom: "conv5"

- top: "conv6-2"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- inner_product_param {

- #kernel_size: 1

- num_output: 4

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "conv6-3"

- type: "InnerProduct"

- bottom: "conv5"

- top: "conv6-3"

- param {

- lr_mult: 1

- decay_mult: 1

- }

- param {

- lr_mult: 2

- decay_mult: 1

- }

- inner_product_param {

- #kernel_size: 1

- num_output: 10

- weight_filler {

- type: "xavier"

- }

- bias_filler {

- type: "constant"

- value: 0

- }

- }

-}

-layer {

- name: "prob1"

- type: "Softmax"

- bottom: "conv6-1"

- top: "prob1"

-}
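The ONet prototxt above declares a 1×3×48×48 input and stacks three conv/pool stages and a 2×2 `conv4` before the `conv5` InnerProduct. Convolution output sizes round down, while Caffe rounds pooling output sizes up. The MXNet symbol reproduces that rounding with `"pooling_convention": "full"`. As a result, the spatial size shrinks 48 → 46 → 23 → 21 → 10 → 8 → 4 → 3, and `conv5` receives 128 × 3 × 3 = 1152 inputs.

The sketch below works through that arithmetic as a hedged illustration. The helper names and the `ONET_LAYERS` table were transcribed by hand for this example, and padding is assumed to be zero, as in every layer here.

```python
import math


def conv_out(size, kernel, stride=1, pad=0):
    """Convolution output size; Caffe and MXNet both round down."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride, pad=0):
    """Max-pooling output size with ceil rounding (Caffe default,
    MXNet pooling_convention="full")."""
    return int(math.ceil((size + 2 * pad - kernel) / stride)) + 1


# (name, kind, kernel, stride) transcribed from det3.prototxt
ONET_LAYERS = [
    ("conv1", "conv", 3, 1),
    ("pool1", "pool", 3, 2),
    ("conv2", "conv", 3, 1),
    ("pool2", "pool", 3, 2),
    ("conv3", "conv", 3, 1),
    ("pool3", "pool", 2, 2),
    ("conv4", "conv", 2, 1),
]


def trace_sizes(input_size, layers):
    """Return [(layer_name, spatial_size)] after each layer, square inputs only."""
    sizes = []
    size = input_size
    for name, kind, kernel, stride in layers:
        size = conv_out(size, kernel, stride) if kind == "conv" else pool_out(size, kernel, stride)
        sizes.append((name, size))
    return sizes


if __name__ == "__main__":
    for name, size in trace_sizes(48, ONET_LAYERS):
        print(f"{name}: {size}x{size}")
    last = trace_sizes(48, ONET_LAYERS)[-1][1]
    # conv4 has num_output 128, so conv5 (InnerProduct) sees 128 * last * last values
    print("conv5 input features:", 128 * last * last)
```

If floor rounding were used for pooling instead, `pool1` would produce 22×22 rather than 23×23. The later shapes would then no longer match the trained `conv5` weights, which is why the MXNet conversion keeps `"full"`.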

diff --git a/deploy/mtcnn-model/det4-0001.params b/deploy/mtcnn-model/det4-0001.params

deleted file mode 100644

index efca9a9..0000000

Binary files a/deploy/mtcnn-model/det4-0001.params and /dev/null differ

diff --git a/deploy/mtcnn-model/det4-symbol.json b/deploy/mtcnn-model/det4-symbol.json

deleted file mode 100644

index aa90e2a..0000000

--- a/deploy/mtcnn-model/det4-symbol.json

+++ /dev/null

@@ -1,1392 +0,0 @@

-{

- "nodes": [

- {

- "op": "null",

- "param": {},

- "name": "data",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "SliceChannel",

- "param": {

- "axis": "1",

- "num_outputs": "5",

- "squeeze_axis": "False"

- },

- "name": "slice",

- "inputs": [[0, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "28",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1_1",

- "inputs": [[1, 0], [2, 0], [3, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_1_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1_1",

- "inputs": [[4, 0], [5, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1_1",

- "inputs": [[6, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "48",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2_1",

- "inputs": [[7, 0], [8, 0], [9, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_1_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2_1",

- "inputs": [[10, 0], [11, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool2_1",

- "inputs": [[12, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_1_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_1_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(2,2)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3_1",

- "inputs": [[13, 0], [14, 0], [15, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_1_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3_1",

- "inputs": [[16, 0], [17, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "28",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1_2",

- "inputs": [[1, 1], [19, 0], [20, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_2_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1_2",

- "inputs": [[21, 0], [22, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1_2",

- "inputs": [[23, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "48",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2_2",

- "inputs": [[24, 0], [25, 0], [26, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_2_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2_2",

- "inputs": [[27, 0], [28, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool2_2",

- "inputs": [[29, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_2_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_2_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(2,2)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3_2",

- "inputs": [[30, 0], [31, 0], [32, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_2_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3_2",

- "inputs": [[33, 0], [34, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_3_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_3_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "28",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1_3",

- "inputs": [[1, 2], [36, 0], [37, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_3_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1_3",

- "inputs": [[38, 0], [39, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1_3",

- "inputs": [[40, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_3_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_3_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "48",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2_3",

- "inputs": [[41, 0], [42, 0], [43, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_3_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2_3",

- "inputs": [[44, 0], [45, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool2_3",

- "inputs": [[46, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_3_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_3_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(2,2)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3_3",

- "inputs": [[47, 0], [48, 0], [49, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_3_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3_3",

- "inputs": [[50, 0], [51, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_4_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_4_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "28",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1_4",

- "inputs": [[1, 3], [53, 0], [54, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_4_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1_4",

- "inputs": [[55, 0], [56, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1_4",

- "inputs": [[57, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_4_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_4_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "48",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2_4",

- "inputs": [[58, 0], [59, 0], [60, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_4_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2_4",

- "inputs": [[61, 0], [62, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool2_4",

- "inputs": [[63, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_4_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_4_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(2,2)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3_4",

- "inputs": [[64, 0], [65, 0], [66, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_4_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3_4",

- "inputs": [[67, 0], [68, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_5_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv1_5_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "28",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv1_5",

- "inputs": [[1, 4], [70, 0], [71, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu1_5_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu1_5",

- "inputs": [[72, 0], [73, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool1_5",

- "inputs": [[74, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_5_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv2_5_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(3,3)",

- "no_bias": "False",

- "num_filter": "48",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv2_5",

- "inputs": [[75, 0], [76, 0], [77, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu2_5_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu2_5",

- "inputs": [[78, 0], [79, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Pooling",

- "param": {

- "global_pool": "False",

- "kernel": "(3,3)",

- "pad": "(0,0)",

- "pool_type": "max",

- "pooling_convention": "full",

- "stride": "(2,2)"

- },

- "name": "pool2_5",

- "inputs": [[80, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_5_weight",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "conv3_5_bias",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "Convolution",

- "param": {

- "cudnn_off": "False",

- "cudnn_tune": "off",

- "dilate": "(1,1)",

- "kernel": "(2,2)",

- "no_bias": "False",

- "num_filter": "64",

- "num_group": "1",

- "pad": "(0,0)",

- "stride": "(1,1)",

- "workspace": "1024"

- },

- "name": "conv3_5",

- "inputs": [[81, 0], [82, 0], [83, 0]],

- "backward_source_id": -1

- },

- {

- "op": "null",

- "param": {},

- "name": "prelu3_5_gamma",

- "inputs": [],

- "backward_source_id": -1

- },

- {

- "op": "LeakyReLU",

- "param": {

- "act_type": "prelu",

- "lower_bound": "0.125",

- "slope": "0.25",

- "upper_bound": "0.334"

- },

- "name": "prelu3_5",

- "inputs": [[84, 0], [85, 0]],

- "backward_source_id": -1

- },

- {

- "op": "Concat",

- "param": {

- "dim": "1",

- "num_args": "5"

- },

- "name": "concat",